Not sure who maintains the ITopinions.com blog, but thanks for adding GotITSolutions.org to your blogroll.

Category Archives: General Discussion

Tragic demise of an Open Source pioneer…

I have been meaning to blog about this for weeks but never got around to it; a call today reminded me. On October 10th, Hans Reiser was taken into custody by Oakland, CA authorities on suspicion of murdering his wife. Hans Reiser is the developer of the popular Linux file system ReiserFS. Following this, Novell has announced that SUSE Linux will move away from ReiserFS to the ext3 file system. Novell claims that the move to ext3 is prompted by stability issues associated with Reiser4 and that ext3 will soon match the performance of ReiserFS. One has to wonder whether the fact that Hans Reiser is facing trial for the murder of his wife played a part in this decision. If convicted, Hans may have nothing to do but work on the code; does anyone know what the computers are like in the California penal system? 🙂

More interesting to me, and a little-known or at least unadvertised fact, is that the EMC Centera product (great EMC Centera internals presentation) leverages ReiserFS, so a move away from ReiserFS may not be as easy for EMC. For those of you who need validation of Centera’s use of ReiserFS, maybe this thread will help. EMC acquired the Centera technology from Brussels, Belgium-based FilePool NV in April 2001; the acquisition was reported as a cash deal valued at less than 50 million dollars (aka a home run!).

The Hans Reiser pre-trial proceedings began this Monday, and Hans has entered a plea of not guilty. ReiserFS development is obviously stalled for the time being.

Read more about the Hans Reiser story here.

The “Trusted Advisor” and the “Trusted Relationship”

This rant was conceived one night while I was lying in bed thinking about the term “Trusted Advisor”. I am not sure why, but I keep getting a vision of a guy trying to sell me a whole life policy. Maybe this is because the term is so heavily associated with the insurance and certified financial planning industries, and maybe that is why the term “Trusted Advisor” makes me feel like I need to take a shower. 2007 marks my 12th year working in the storage industry in a customer-facing role. Over those twelve years I have met with thousands of customers, and I can honestly say I have never once billed myself as a “Trusted Advisor”, but I have built hundreds of “Trusted Relationships”. I asked myself why the two terms are different to me, and the following is what came to mind. Technology is a moving target; as advisors we draw upon market data, practical experience and technical acumen, but there are no true guarantees. Is trust, and can trust be, the product of advice? In my humble opinion, trust is built on relationship, and a relationship is built on something far less tangible than advice.

I gave this analogy to my wife last night. I have a financial advisor who has delivered good financial returns over the past 10 years. He considers himself a “Trusted Advisor”; I consider him a financial advisor who makes me money because it makes him money. This is not a negative statement but rather reality: he is running a business, not a philanthropy. BTW, I don’t “Trust” him. He does a good job, and his competence and diligent nature are what keep him around; he works hard and invests his personal time in me, which I value. On the other hand, my brother is possibly the worst financial mind in the history of Homo sapiens, but we have a trusted relationship. I trust him because of our relationship and the assurances that relationship provides; the fact that I just know he would never intentionally or knowingly screw me is what I call “Trust”.

As technologists or financial advisors, should we try to gain trust through advice, and is it even possible, in situations where hundreds or even thousands of variables can affect the effectiveness of our advice?

I don’t have the answer. What I do know is that, in my experience, personal relationships are not replaceable and are not earned through solicited or unsolicited advice. People trust people, not advice or technologies.

Finally, over the past 12 years I have given advice on solutions that made people heroes, and I have given advice that absolutely sucked, sometimes through my own doing and sometimes due to variables outside my control. The thing I am most proud of is that I have never abandoned anyone and I have never burnt a relationship. I have always worked hardest to make sure the perception was one of success; ultimately this is the only thing that matters. In my opinion, the strong personal bond that is a relationship cannot be formed between a corporation and an individual. This will always be the job of individuals, and the value of the “Trusted Relationship” lies in the hands of the individual. Corporations can only hope to be lucky enough to cash in on the dividends of the “Trusted Relationship”.

So what does this all really mean? To me, advice and trust have nothing to do with each other, and it should stay that way. On the other hand, trust and relationship go hand in hand. Good advice or bad advice may very well affect them, but neither trust nor relationship should be predicated on advice.

Not sure if anyone watches “The Colbert Report”, but Stephen Colbert uses the word “truthiness” in a political context. Today every “Trusted Advisor” has their own sense of “truthiness”. Betting my relationships or trust on truthiness just does not seem like a good idea 🙂

Well this is definitely a rant. Oh and there is plenty of “truthiness” to go around as well 🙂

Been pretty busy…

So I have been pretty busy the past couple of weeks: two weeks in Vegas, first at the 2006 Gartner Data Center Conference and then at Storage Decisions. While I was flying around, my brain, as always, was thinking of topics that would make for a good rant. So I am working on the following blogs, to be published in the coming days:

- The “Trusted Advisor” and the “Trusted Relationship”

- Information Technology Segmentation – Why? How? What?

- Required changes and challenges associated with infrastructure convergence.

Today I am working on the “Trusted Advisor” blog, which I believe should prompt some significant discussion.

Completed Microsoft and Novell agreement research

After reading a post from my good friend Alex Weeks at vi411.org about his opinion on the Microsoft and Novell agreement, I was reminded that I wanted to post my take after doing some research. I have come to the conclusion, as many others have, that this was a big win for Microsoft because they now gain access to the “Pike Patent”. For those of you unfamiliar with this patent, it is important to note that Microsoft has been in loose violation of it since the dawn of the Windows OS; now any potential problems have evaporated. In short, the Pike patent deals with overlapping windows and transparency. It was originally granted to AT&T and acquired by Novell, and Microsoft now has access to it. Microsoft also gains access to some interesting SMP and clustering patents. My guess is that this makes sense for Novell because of the huge influx of cash and the “potential” access to Microsoft technology like AD, CIFS, etc. Only time will tell, but I think Microsoft actually gained much more from this deal; somehow they always do. Novell, the modern-day Gary Kildall?

The fixation with virtualization

There is a heavy focus on the evolution toward the virtual infrastructure. Today x86 virtualization is leading the charge, but we are moving toward a virtual infrastructure. Does this remind anyone of the “Utility Computing” discussion that the emergence of the SAN (Storage Area Network) drove? Having just returned from VMworld 2006, where VMware talked about virtualization as a revolution, and now sitting at the Gartner Data Center Conference 2006 listening to the first keynote frame much of the conference around virtualization, I think the VMware folks would be very proud. Another major point discussed was the driving forces behind what Gartner calls Real-Time Infrastructure (to be defined in a later post; ironically, I defined something I called JiT (Just-in-Time) Infrastructure two years ago, for whatever it’s worth). Gartner defines three driving forces behind the need to move to a Real-Time Infrastructure:

- Economics

- QoS (Quality of Service)

- Agility

I will explain each of these in a follow-up post but it is not hard to see how virtualization is front and center with drivers like these.

Lastly, the Gartner analyst talked about how, in the future, the hypervisor will become free and the cost will reside in resource management and orchestration. I’m interested in your thoughts specifically on this comment: if, when and why?

The promise of an active week

This week I will be attending the 25th annual Gartner Data Center Summit in Las Vegas. Today I built my agenda and there are a few sessions that hold some promise. I will attempt to publish my observations and feedback as quickly as possible. I look forward to your opinions on what the Gartner analysts have to say.

Disk I/O a Virtualization focus area…

I believe it is fair to say that disk I/O performance characteristics have not been the focus of VMware in the past, but it seems VMware has taken some strides to address this in VI3. The ADC0135 (Choosing and Architecting Storage for Your Environment) session was a bit basic for someone who understands disk technology, but I think that over the past few years so much emphasis has been put on server consolidation that much of the VMware community has ignored the disk I/O discussion. I don’t think this was intentional; the value proposition around consolidation and test/dev was so impressive that I/O was not a primary concern, and the target audience has often been server engineering teams rather than storage engineering.

During the session the presenters reviewed some rudimentary topics such as SAN, NAS, iSCSI, DAS and where each is applicable, as well as technological differentiators between technologies such as Fibre Channel (FC) and ATA (Advanced Technology Attachment), e.g. Tagged Command Queuing. As a proof point, the moderator polled the audience of about 300 people, asking if anyone had ever heard of HIPPI (High-Performance Parallel Interface), and about 3 people raised their hands. This is understandable, as the target audience for VMware has traditionally been the server engineering team and/or developers and not the storage engineers, hence the probable lack of a detailed understanding of storage interconnects.
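To illustrate why command queuing is a differentiator, here is a toy model in Python. It is my own simplification, not anything shown in the session: it compares total head travel when outstanding requests are serviced in arrival order versus reordered with a simple elevator (SCAN) sweep, which is the kind of reordering a drive can do once it can see several queued commands at once. Seek cost is assumed to be proportional to LBA distance, and the LBAs are made up.

```python
from typing import List


def head_travel(start: int, order: List[int]) -> int:
    """Sum of absolute LBA distances the head moves to service requests in this order."""
    travel = 0
    position = start
    for lba in order:
        travel += abs(lba - position)
        position = lba
    return travel


def elevator_order(start: int, pending: List[int]) -> List[int]:
    """Simple SCAN: service requests at or above the head in ascending order,
    then sweep back down through the remaining ones in descending order."""
    upward = sorted(lba for lba in pending if lba >= start)
    downward = sorted((lba for lba in pending if lba < start), reverse=True)
    return upward + downward


if __name__ == "__main__":
    head = 500                        # hypothetical current head position (LBA)
    queue = [900, 100, 850, 150, 800]  # hypothetical outstanding commands, arrival order
    print("FIFO travel:    ", head_travel(head, queue))
    print("Elevator travel:", head_travel(head, elevator_order(head, queue)))
```

With these made-up numbers the FIFO order moves the head 3300 LBAs and the reordered sweep 1200. Real drive firmware uses far more sophisticated scheduling (rotational position, for one), so treat this only as intuition for why a deeper visible queue helps.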

With VMware looking for greater adoption in the corporate production IT environment by leveraging new value propositions focused on business continuity, disaster recovery and a host of others, virtualized servers will demand high I/O performance from both a transaction and a bandwidth perspective. Storage farms will grow and become more sophisticated, and more attention will be paid to integrating VMware technology with complex storage technologies such as platform-based replication (e.g. EMC SRDF), snapshot technology (e.g. EMC TimeFinder) and emerging technologies like CDP (Continuous Data Protection).

A practical example of what I believe has been a lack of education around storage and storage best practices is that many VMware users are, I believe, unaware of partition offset alignment. Offset alignment is a best practice that absolutely should be followed. It is not a function or responsibility of VMware, but it is an often overlooked best practice. (Engineers who grew up in the UNIX world and are familiar with command strings like “sync;sync;sync” typically align partition offsets, but admins who grew up in the Windows world, I find, often overlook offset alignment unless they are very savvy Exchange or SQL performance gurus.) Windows users have become accustomed to partitioning with Disk Management, from which it is not possible to align offsets; diskpar must be used to partition and align offsets.
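A minimal sketch of why alignment matters, in Python. It assumes 512-byte sectors, a first partition starting at sector 63 (the default for Windows MBR partitioning tools of this era) and a hypothetical 64 KB array stripe unit; check your own array’s stripe size. The function names are mine, purely for illustration. The idea: when the partition start is not a multiple of the stripe unit, some host I/Os straddle two stripe units, so the array does two back-end operations for one host I/O.

```python
SECTOR_BYTES = 512  # assumption: classic 512-byte sectors


def is_aligned(start_sector: int, stripe_kb: int) -> bool:
    """True if the partition's starting byte offset is a multiple of the stripe unit."""
    return (start_sector * SECTOR_BYTES) % (stripe_kb * 1024) == 0


def stripe_units_touched(start_sector: int, io_offset: int, io_bytes: int, stripe_kb: int) -> int:
    """Number of stripe units one I/O spans, given the partition start sector,
    the I/O's byte offset within the partition, and the I/O size in bytes."""
    stripe = stripe_kb * 1024
    first_byte = start_sector * SECTOR_BYTES + io_offset
    last_byte = first_byte + io_bytes - 1
    return last_byte // stripe - first_byte // stripe + 1


def next_aligned_sector(start_sector: int, stripe_kb: int) -> int:
    """Smallest start sector >= start_sector that falls on a stripe-unit boundary."""
    sectors_per_stripe = stripe_kb * 1024 // SECTOR_BYTES
    return -(-start_sector // sectors_per_stripe) * sectors_per_stripe  # ceiling division


if __name__ == "__main__":
    for start in (63, 128):
        print(start, is_aligned(start, 64),
              stripe_units_touched(start, 32768, 4096, 64),  # 4 KB I/O, 32 KB into the partition
              stripe_units_touched(start, 0, 65536, 64))     # 64 KB I/O at the partition start
    print("next aligned start:", next_aligned_sector(63, 64))
```

With these assumptions, a partition starting at sector 63 is misaligned, so both sample I/Os touch two stripe units, while starting at sector 128 (a 64 KB offset) keeps each of them within one.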

I would be interested in some feedback: how many VMware / Windows users did not do this when installing Windows VMs? Be honest! If you are not using diskpar to create partitions and align offsets, it means that we need to do a better job educating.

Other notable points from the session:

- In ESX 2.5.x and earlier, FC-AL was not supported by VMware; VI3 supports FC-AL.

- VI3 supports 3 outstanding tagged command queues per VMDK, vs. the single tagged command queue per VMFS that was available in ESX 2.5.x and earlier. If someone else can verify this it would be great, because I have a question mark next to it in my notes, which means I may not have heard it correctly.
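Why would more outstanding tagged commands matter? A back-of-the-envelope sketch in Python using Little’s law (throughput = outstanding requests / latency). The 5 ms latency is a hypothetical number, the queue depth of 3 is the figure from my unverified notes, and the model assumes latency stays flat as queue depth grows, which real disks do not guarantee, so treat the result as an upper bound rather than a prediction.

```python
def max_iops(outstanding: int, latency_ms: float) -> float:
    """Little's law rearranged: throughput = concurrency / time in system.
    Assumes `outstanding` commands are kept in flight at all times and each one
    completes after `latency_ms` on average, independent of queue depth."""
    if outstanding < 1 or latency_ms <= 0:
        raise ValueError("need at least one outstanding command and a positive latency")
    return outstanding * 1000.0 / latency_ms


if __name__ == "__main__":
    latency = 5.0  # hypothetical average service time in milliseconds
    for depth in (1, 3):
        print(f"queue depth {depth}: at most {max_iops(depth, latency):.0f} IOPS")
```

Under these assumptions a single outstanding command caps out at 200 IOPS and three at 600, which is the intuition behind caring about queue depth per VMDK.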

Virtualization energy incentive!!!

Wow!!!! A representative from PG&E (Pacific Gas and Electric) just walked out on stage during the general session at VMworld 2006 to announce a program where PG&E customers will see a 300 to 600 dollar energy credit for every physical server they remove from their compute environment by leveraging virtualization. This is truly incredible! VMware is now capturing the attention of the energy community; all I can say is WOW!! Not only is virtualization a revolution on its own, but VMware is acting as a catalyst for the energy revolution. The purpose of the program is to continue PG&E’s charter of reducing global warming and our dependence on oil. This is a revolution.
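For a rough sense of the dollars, here is a quick Python estimate using the 300 to 600 dollar per-server range announced on stage. The consolidation ratio is a hypothetical input, the sketch assumes "servers removed" means the original physical servers minus the new virtualization hosts, and it ignores any eligibility rules or caps the program may have, since I don’t have those details.

```python
import math
from typing import Tuple


def servers_removed(physical_servers: int, consolidation_ratio: int) -> int:
    """Net physical servers removed: the originals minus the hosts needed to run them as VMs."""
    hosts_needed = math.ceil(physical_servers / consolidation_ratio)
    return physical_servers - hosts_needed


def credit_range(removed: int, low_per_server: int = 300, high_per_server: int = 600) -> Tuple[int, int]:
    """Low and high estimates of the total credit for `removed` servers."""
    return removed * low_per_server, removed * high_per_server


if __name__ == "__main__":
    removed = servers_removed(100, 10)  # hypothetical: 100 servers consolidated at 10:1
    low, high = credit_range(removed)
    print(f"{removed} servers removed: ${low:,} to ${high:,}")
```

Running it prints "90 servers removed: $27,000 to $54,000"; plug in your own server count and consolidation ratio.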

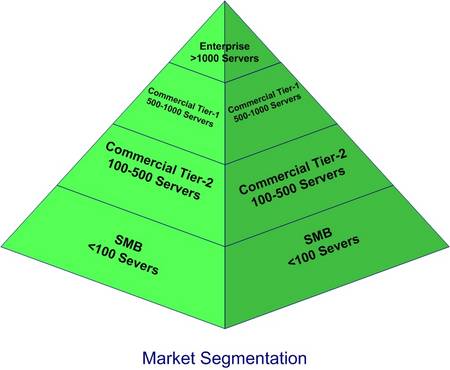

VMworld 2006 – Market Segmentation

This morning Carl Eschenbach, executive vice president of VMware’s worldwide field operations, presented to the VMware partner community, and he presented what I feel was one of the better representations of market segmentation. Many IT vendors segment the marketplace by revenue dollars, which proves to be difficult because revenue does not always directly correlate to IT spend, which is what we are all most interested in. VMware represents market segmentation by the number of installed servers, which I believe functions as a much better barometer of an organization’s investment in technology. I quickly recreated the segmentation model for your viewing pleasure.