Almost a year ago, a group of my peers and I began a process focused on identifying the infrastructure challenges that organizations face today. Over time, this process evolved into a detailed thesis on how we got here and the actions required to close the “Infrastructure Chasm”.

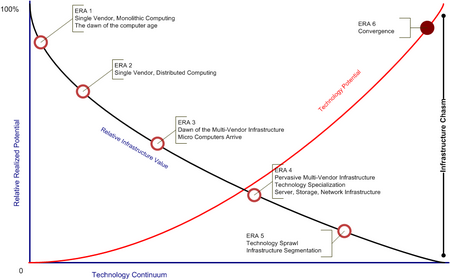

We started the process by identifying how we got to where we are today. The evolution of the modern infrastructure was broken down into six distinct eras:

- ERA 1
  - Monolithic Computing
  - Single Vendor Infrastructure
  - e.g. IBM, UNIVAC I
- ERA 2
  - Distributed Computing
  - Single Vendor Infrastructure
  - e.g. DEC PDP-8
- ERA 3
  - Dawn of the Multi-Vendor Infrastructure
  - Microcomputers begin to arrive
  - e.g. Commodore, Apple, Atari, Tandy, IBM, etc.
- ERA 4
  - Technology Specialization
  - Focused companies build more specialized devices and software
  - Gives way to companies like Cisco, Sun, Microsoft, Oracle
  - Technology interoperability and integration become paramount
- ERA 5
  - Technology Sprawl
  - Infrastructure Segmentation
  - Complexity and required skill sets force organizations to segment infrastructure and management
  - e.g. Server, Storage and Network
- ERA 6
  - Convergence
  - The whole is greater than the sum of the parts
  - The ability to leverage knowledge capital across disciplines
  - Increased ROA (Return on Assets)
  - Holistic Strategy

During this exercise we realized that while potential technology capabilities have increased exponentially, it has become more difficult to extract their maximum potential. Many technologies exceed market requirements, and complexity forces segmentation, making it nearly impossible to develop a holistic strategy. While we continue to increase technology potential, our ability to realize its value is diminishing.

This gap between the potential value of technology and the value actually realized is what I refer to as the “Infrastructure Chasm”.

The term infrastructure is defined as the set of interconnected structural elements that provide the framework for supporting an entire structure. I propose that information technology infrastructure is more appropriately defined as an umbrella term commonly used to describe segmented, heterogeneous, disparate technology components.

Our need to solve tactical information technology infrastructure issues causes us to further propagate a lack of organizational strategic relevance. To begin to address this in today’s mature information technology infrastructure, organizations must add strategic relevance to their information technology infrastructure, remove management segmentation and drive convergence.

To begin this process, each element of the information technology infrastructure must be dissected and optimized to the fullest extent possible. Once element-level optimization is complete, we can begin to map the discrete information technology elements into a true converged infrastructure. Once the convergence process is complete, optimization must be performed again, this time on the infrastructure as a single entity.

At this point we have forged the “Ultra-Structure”, a paradigm where segmentation and tactical behavior cease to exist; all behavior within the “Ultra-Structure” has strategic relevance and elicits positive business impact.

The “Ultra-Structure” is best defined as a paradigm that shifts conventional technology thinking from reactive point solutions to a holistic strategic foundation.

Why is a paradigm shift so critical? For years organizations have been implementing and maintaining tactical infrastructure solutions. The “Ultra-Structure” paradigm provides a model to optimize, architect, design, implement and maintain superior solutions by applying strategic relevance to tactical infrastructure.

The “Ultra-Structure” cannot be compartmentalized or segmented; the intrinsic value of the “Ultra-Structure” is far greater than the sum of its discrete elements.

I am very interested in your thoughts.

-RJB