So this post is a slightly modified version of some internal documentation that I shared with my management, with the folks at Dell who graciously donated the compute, PCIe SSDs and 10 Gig network for this project, and with the folks at EMC who of course donated the ScaleIO licensing (and hopefully soon the ViPR 2.0 licensing). Given the genesis of this post and my all-around lack of time for editing, some of the writing and tense may not always be logical.

Just about everyone knows that Dell and EMC aren’t exactly best friends these days, but could there be a better match for this architecture? Cough, cough, Supermicro, cough, cough, Quanta… But seriously, the roll-your-own Supermicro, Linux, Ceph, Swift, etc… type of architecture isn’t for everyone. Some people still want reasonably supported hardware and software at pricing that rivals the likes of Supermicro and open-source software (OSS). BTW, there is a cost to OSS; it’s called your time. Think about it: I need to build a private scale-out architecture. I want it to be lower cost and high performance, to support both physical and virtual environments, and to offer elasticity and the ability to scale to the public cloud. Oh yeah, and I want an enterprise-class support mechanism for both the hardware and software I deploy as part of this solution.

Most have heard the proverb “the enemy of my enemy is my friend”. The reality is that Dell and EMC are frenemies, whether or not they know it or are willing to admit it. I am currently implementing Chapter III in this series, and trust me, the enemy (competition) is a formidable one, known as the elastic public cloud! Take your pick: AWS, Google, Azure, ElasticHosts, Bitnami, GoGrid, Rackspace, etc… Will they replace the private cloud? Probably not (at least not in the foreseeable future), as there are a number of reasons the private cloud needs to exist and will continue to exist, reasons like regulations, economics, control, etc…

We are in a rapidly changing landscape where the hardware market is infected with the equivalent of the Ebola virus, hemorrhaging interest, value and margin. The sooner we accept this fact and begin to adapt (really adapt), the better our chances of avoiding extinction. Let’s face it: there are many OEMs, VARs, individuals, etc… who are more focused on containment than on a cure. All of us who have sold, architected, installed, maintained, etc… traditional IT infrastructure face a very real challenge from a very real threat. The opposing force possesses the will and tactics of the Spartans and the might of the Persians. If we (you and I) don’t adapt, and think we can continue with business as usual, more focused on containment than on curing our own outdated business models, we will face a very real problem in the not-so-distant future.

That said, there is no doubt that EMC is hyperfocused on software, much of it new (e.g. – ViPR, ScaleIO, Pivotal, etc…), with many tried and true platforms already instantiated in software or planned to be (e.g. – RecoverPoint, Isilon, etc…). As compute costs continue to plummet, more functionality can be supported at the application and OS layers, which changes the intelligence needed from vendors. In the IT plumbing space (specifically storage), technologies like MS Exchange DAGs and SQL AlwaysOn Availability Groups have been the catalyst for a significant shift: the focus has begun to move to features like automation rather than array-based replication.

The market is changing fast and we are all scrambling to adapt and figure out how we will add value tomorrow. I am no different from anyone else, spending my time and money on AWS.

Anyway, there is too much to learn and not enough time. I read more than ever on my handheld device (maybe the 5” screen handheld is a good idea after all; I always thought it was too large). As I work on Chapter II of this series, I found myself at dinner the other night with my kids reading the Fabric documentation, trying to decide if I should use Fabric to automate my deployment or just good old shell scripts and the AWS CLI. Then my mind started wandering to what I do after Chapter III: maybe there is a Chapter IV and V with different instance types, or maybe I should try Google Compute or Azure. So many choices, so little time.

Update: Chapter II and Chapter III of this series are already complete, and I have actually begun working on Chapter IV.

For sure there will be a ScaleIO and ViPR chapter, but I need to wait for ViPR 2.0.

This exercise is just my humble effort to become competent in the technologies that will drive the future of enterprise architecture and, hopefully, stay somewhat relevant.

High-level device component list for the demo configuration build:

- Server Hardware (Qty 4):

    - Dell PowerEdge R620, Intel Xeon E5-2630v2 2.6GHz Processors, 64 GB of RAM

    - PERC H710P Integrated RAID Controller

    - 2 x 250GB 7.2K RPM SATA 3Gbps 2.5in Hot-plug Hard Drive

    - 175GB Dell PowerEdge Express Flash PCIeSSD Hot-plug

- Networking Hardware:

    - Dell Force10 S4810 (10 GigE Production Server SAN Switch)

    - TRENDnet TEG-S16DG (1 GigE Management Switch)

High-level software list:

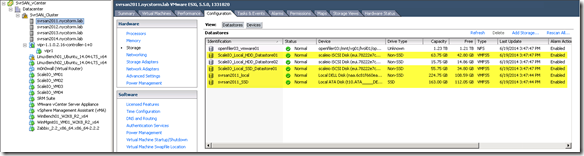

- VMware ESX 5.5.0 build 1331820

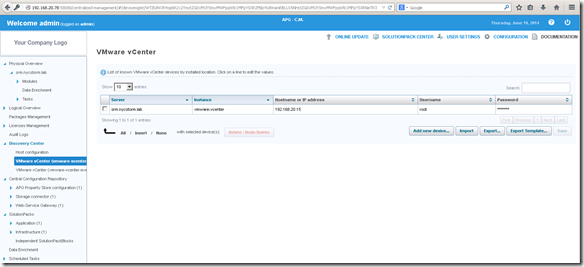

- VMware vCenter Server 5.5.0.10000 Build 1624811

- EMC ScaleIO: ecs-sdc-1.21-0.20, ecs-sds-1.21-0.20, ecs-scsi_target-1.21-0.20, ecs-tb-1.21-0.20, ecs-mdm-1.21-0.20, ecs-callhome-1.21-0.20

- Zabbix 2.2

- EMC ViPR Controller 1.1.0.2.16

- EMC ViPR SRM Suite

- IOzone 3.424

- Ubuntu 14.04 LTS 64 bit (Benchmark Testing VM)

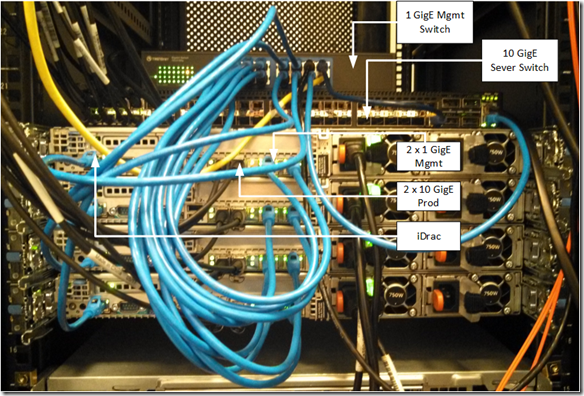

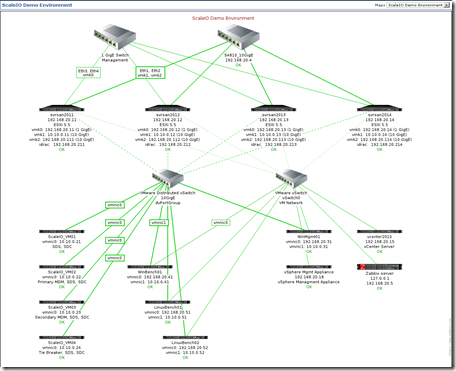

What the configuration physically looks like:

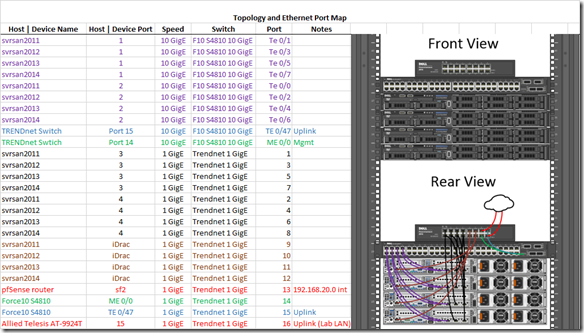

Topology and Layer 1 connections:

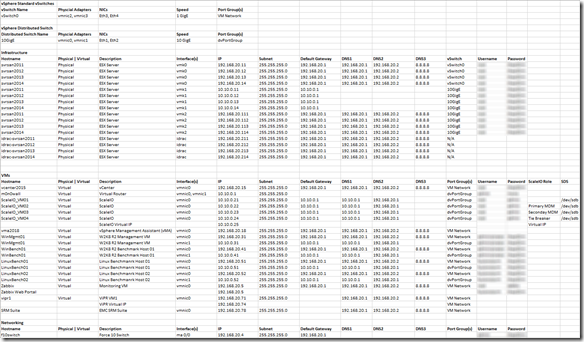

Below are the logical configuration details for the ScaleIO lab environment (minus login credentials, of course):

Dell Force10 S4810 Config: http://nycstorm.com/nycfiles/repository/rbocchinfuso/ScaleIO_Demo/s4810_show_run.txt

Base ScaleIO Config File: http://nycstorm.com/nycfiles/repository/rbocchinfuso/ScaleIO_Demo/scaleio_config_blog.txt

ScaleIO Commands: http://nycstorm.com/nycfiles/repository/rbocchinfuso/ScaleIO_Demo/scalio_install_cmds_blog.txt

The ScaleIO environment is up and running and can be demoed by someone who knows the config and ScaleIO; most of the configuration is done via the CLI and requires some familiarity given the current level of documentation.

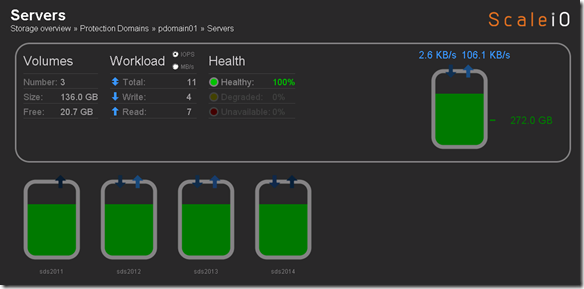

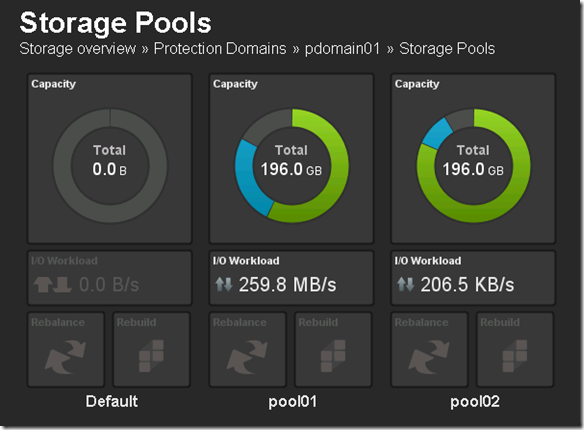

Below you can see that 136 GB of aggregate capacity is available across all the ScaleIO nodes (servers).

This is not intended to be a ScaleIO internals deep dive, but here is some detail on how ScaleIO usable capacity is calculated:

Starting from the total aggregate capacity across the SDS nodes:

- 100 / (number of SDS servers) = % reserved for spare capacity

- Half of the remaining capacity goes to mirroring

For example, in a ScaleIO cluster with 4 nodes and 10 GB per node, the math would be as follows:

- 40 GB of aggregate capacity

- 100/4 = 25% (or 10 GB) for spare capacity

- 0.5 × 30 GB (remaining capacity) = 15 GB of available/usable capacity
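The same arithmetic as a small Python function. This is a minimal sketch that assumes every SDS node contributes the same capacity and that the 1/N spare and two-copy mirroring rules above apply; the function name is illustrative and not part of any ScaleIO tool.

```python
def scaleio_usable_capacity(num_sds: int, capacity_per_node_gb: float) -> float:
    """Estimate usable capacity: reserve 1/N of raw capacity as spare
    (N = number of SDS nodes), then halve the rest for the mirror copy."""
    if num_sds < 2:
        raise ValueError("mirroring needs at least two SDS nodes")
    raw = num_sds * capacity_per_node_gb   # total aggregate capacity
    spare = raw / num_sds                  # 100/N percent held back as spare
    return (raw - spare) / 2               # half of the remainder is usable


print(scaleio_usable_capacity(4, 10))  # 15.0
```

With four nodes, three quarters of raw capacity survives the spare reservation and half of that is usable, so usable capacity is 37.5% of raw; adding nodes shrinks the spare share (1/N) and pushes the ratio toward 50%.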

- svrsan201#_SSD – This is the local PCIe SSD on each ESX server (svrsan201#)

- svrsan201#_local – These are the local HDDs on each ESX server (svrsan201#)

- ScaleIO_Local_SSD_Datastore01: The federated ScaleIO SSD volume presented from all four ESX servers (svrsan2011 – 2014)

- ScaleIO_Local_HDD_Datastore01: The federated ScaleIO HDD volume presented from all four ESX servers (svrsan2011 – 2014)

Detailed VMware Configuration Output: http://nycstorm.com/nycfiles/repository/rbocchinfuso/ScaleIO_Demo/ScaleIO_VMware_Env_Details_blog.html

To correlate the above back to the ScaleIO backend configuration the mapping looks like this:

Two (2) configured Storage Pools both in the same Protection Domain

- pool01 is an aggregate of SSD storage from each ScaleIO node (ScaleIO_VM1, ScaleIO_VM2, ScaleIO_VM3 and ScaleIO_VM4)

- pool02 is an aggregate of HDD storage from each ScaleIO node (ScaleIO_VM1, ScaleIO_VM2, ScaleIO_VM3 and ScaleIO_VM4)

Note: Each of the ScaleIO nodes (ScaleIO_VM1, ScaleIO_VM2, ScaleIO_VM3 and ScaleIO_VM4) is tied to an ESX node (ScaleIO_VM1 -> svrsan2011, ScaleIO_VM2 -> svrsan2012, ScaleIO_VM3 -> svrsan2013, ScaleIO_VM4 -> svrsan2014)

Each Storage Pool has configured volumes:

- pool01 has one (1) configured volume of ~56 GB. This volume is presented to the ESX servers (svrsan2011, svrsan2012, svrsan2013 & svrsan2014) as ScaleIO_Local_SSD_Datastore01

- pool02 has two (2) configured volumes totaling ~80 GB: ScaleIO_Local_HDD_Datastore01 = ~60 GB and ScaleIO_Local_HDD_Datastore01 = ~16 GB. These two logical volumes share the same physical HDDs across the ScaleIO nodes.

Some Additional ScaleIO implementation Tweaks

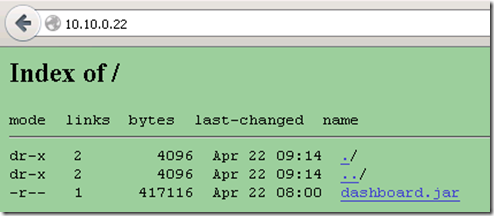

The ScaleIO GUI console seen above is a jar file that needs to be SCPed from the MDM host to your local machine to be run (it lives in /opt/scaleio/ecs/mdm/bin/dashboard.jar). I found this to be a bit arcane, so I installed thttpd (http://www.acme.com/software/thttpd/) on the MDM server to make it easy to get the dashboard.jar file.

On the MDM server do the following:

- zypper install thttpd

- cd /srv/www/htdocs

- mkdir scaleio

- cd ./scaleio

- cp /opt/scaleio/ecs/mdm/bin/dashboard.jar .

- vi /etc/thttpd.conf

- change www root dir to “/srv/www/htdocs/scaleio”

- restart the thttpd server “/etc/init.d/thttpd restart”

- Now the .jar file can be downloaded from http://10.10.0.22/
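The copy and config-edit steps can also be scripted. The sketch below is hypothetical: it assumes thttpd reads a key=value config file in which `dir=` sets the document root, and that Python 3 is available on the MDM host; the paths are the ones used in the steps above. Restarting thttpd is still left to the init script.

```python
import re
import shutil
from pathlib import Path

# Paths taken from the steps above; adjust if your MDM layout differs.
JAR_SRC = "/opt/scaleio/ecs/mdm/bin/dashboard.jar"
WEB_ROOT = "/srv/www/htdocs/scaleio"
THTTPD_CONF = "/etc/thttpd.conf"

# Matches an uncommented dir= line (assumed thttpd document-root option).
_DIR_LINE = re.compile(r"^dir=.*$", re.MULTILINE)


def set_thttpd_dir(conf_text: str, new_dir: str) -> str:
    """Point the dir= option at new_dir, appending the line if it is missing."""
    updated, count = _DIR_LINE.subn(lambda _m: f"dir={new_dir}", conf_text)
    if count == 0:
        if updated and not updated.endswith("\n"):
            updated += "\n"
        updated += f"dir={new_dir}\n"
    return updated


def publish_dashboard(jar_src: str = JAR_SRC, web_root: str = WEB_ROOT,
                      conf_path: str = THTTPD_CONF) -> None:
    """Copy dashboard.jar into the web root and rewrite the thttpd config.
    Run /etc/init.d/thttpd restart afterwards."""
    Path(web_root).mkdir(parents=True, exist_ok=True)
    shutil.copy2(jar_src, web_root)
    conf = Path(conf_path)
    conf.write_text(set_thttpd_dir(conf.read_text(), web_root))
```

Using a callable replacement in `subn` keeps any backslashes in the path from being interpreted as regex escapes; commented-out `#dir=` lines are left alone.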

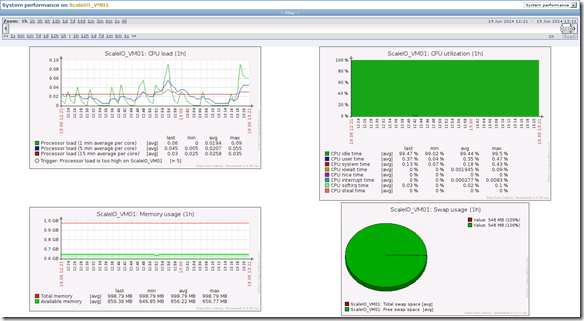

I wanted a way to monitor the health and performance (CPU, memory, link utilization, etc…) of the ScaleIO environment, including ESX servers, ScaleIO nodes, benchmark test machines, switches, links, etc…

- Deployed Zabbix (http://www.zabbix.com/) to monitor the ScaleIO environment

- Built demo environment topology with active elements

- Health and performance of all ScaleIO nodes, ESX nodes, VMs and infrastructure components (e.g. – switches) can be centrally monitored
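The post doesn't describe how the Zabbix data was consumed beyond the web UI. As a hypothetical sketch, the same host inventory can be read from the Zabbix JSON-RPC API using only the Python standard library; the endpoint URL and credentials are placeholders, and the `user`/`password` parameter names follow the 2.x `user.login` method (later Zabbix releases renamed `user` to `username`).

```python
import json
import urllib.request

# Placeholder endpoint: the frontend path depends on how Zabbix was installed.
ZABBIX_URL = "http://zabbix.example.local/zabbix/api_jsonrpc.php"


def rpc_payload(method, params, auth=None, request_id=1):
    """Build a JSON-RPC 2.0 request body for the Zabbix API."""
    payload = {"jsonrpc": "2.0", "method": method, "params": params, "id": request_id}
    if auth is not None:
        payload["auth"] = auth  # session token returned by user.login
    return payload


def call(url, payload):
    """POST one request and return its "result", raising on an API error."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json-rpc"},
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    if "error" in body:
        raise RuntimeError(body["error"])
    return body["result"]


def list_monitored_hosts(url, user, password):
    """Log in, then return [{"hostid": ..., "host": ...}, ...] for every host."""
    token = call(url, rpc_payload("user.login", {"user": user, "password": password}))
    return call(url, rpc_payload("host.get", {"output": ["hostid", "host"]},
                                 auth=token, request_id=2))
```

Calling `list_monitored_hosts(ZABBIX_URL, "<user>", "<password>")` would return the ESX servers, ScaleIO nodes, VMs and switches registered in Zabbix.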

Preliminary Performance Testing

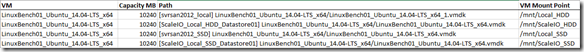

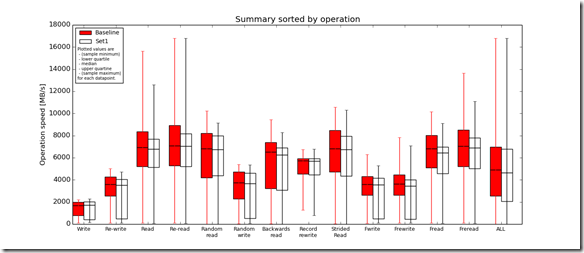

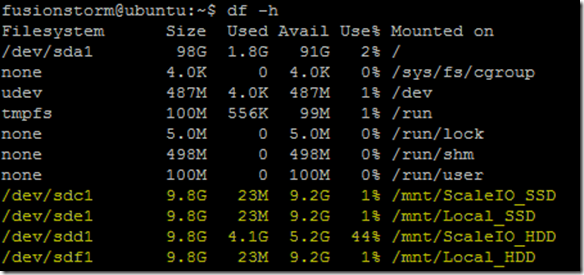

Testing was performed using a single Linux VM with the following devices mounted:

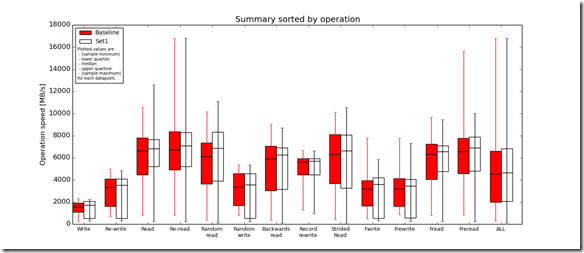

Performance testing was done using IOzone (http://www.iozone.org/), and the results were parsed, aggregated and analyzed using Python (http://www.python.org/), R (http://www.r-project.org/), SciPy (http://www.scipy.org/) and Jinja2 (http://jinja.pocoo.org/).
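The author's parsing scripts aren't published, so the following is only a sketch of the parsing step. It assumes the plain-text report from IOzone's automatic mode (`iozone -a`), where each data row holds the file size and record length in kB followed by throughput figures in kB/s in the column order listed in `COLUMNS` (an assumption, not taken from this lab's output). Banner and header lines are skipped because they aren't all-numeric.

```python
from statistics import mean

# Assumed column order of an `iozone -a` text report; throughput columns are kB/s.
COLUMNS = ["kB", "reclen", "write", "rewrite", "read", "reread",
           "random_read", "random_write", "bkwd_read", "record_rewrite",
           "stride_read", "fwrite", "frewrite", "fread", "freread"]


def parse_iozone(report: str) -> list:
    """Return one dict per data row; banner and header lines are skipped."""
    rows = []
    for line in report.splitlines():
        fields = line.split()
        if len(fields) == len(COLUMNS) and all(f.isdigit() for f in fields):
            rows.append(dict(zip(COLUMNS, map(int, fields))))
    return rows


def mean_by_test(rows: list) -> dict:
    """Average each throughput column across all file/record sizes in the run."""
    return {col: mean(row[col] for row in rows) for col in COLUMNS[2:]}
```

Averaging across every file and record size is a simplification; a closer analysis would compare matching (kB, reclen) pairs between devices.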

Due to limited time and the desire to capture some quick statistics, a single IOzone run was made against each device, with the local HDD and SSD devices providing the baseline sample data and the ScaleIO volumes providing the comparative data set.
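With one run per device, a comparison can show the direction and size of each difference but not whether it is statistically significant. A minimal, hypothetical helper for that step takes per-test averages for the Baseline (local device) and Set1 (ScaleIO volume) and reports Set1's percent change; the metric names are illustrative, and no results from this lab are reproduced here.

```python
def percent_change(baseline: dict, set1: dict) -> dict:
    """Percent change of Set1 relative to Baseline for every metric both share.

    Metrics with a zero baseline are skipped to avoid dividing by zero."""
    return {
        metric: round(100.0 * (set1[metric] - base) / base, 1)
        for metric, base in baseline.items()
        if metric in set1 and base != 0
    }
```

Because IOzone reports throughput, a positive value means the ScaleIO volume was faster than the local device for that test.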

Test 1: Local HDD device vs ScaleIO HDD distributed volume (test performed against /mnt/Local_HDD and /mnt/ScaleIO_HDD, see table above)

- Baseline = Local HDD

- Set1 = ScaleIO HDD

- Complete Local HDD vs ScaleIO HDD distributed volume performance testing analysis output: http://nycstorm.com/nycfiles/repository/rbocchinfuso/ScaleIO_Demo/hdd_benchmark1/index.html

Test 2: Local SSD device vs ScaleIO SSD distributed volume (test performed against /mnt/Local_SSD and /mnt/ScaleIO_SSD, see table above)

- Baseline = Local SSD

- Set1 = ScaleIO SSD

- Complete Local SSD vs ScaleIO SSD distributed volume performance testing analysis output: http://nycstorm.com/nycfiles/repository/rbocchinfuso/ScaleIO_Demo/ssd_benchmark1/index.html

Note: Local (HDD | SSD) = a single device in a single ESX server. ScaleIO (HDD | SSD) makes use of the same HDD or SSD device in the server used in the local test, plus all the other HDD | SSD devices in the other nodes, to provide aggregate capacity, performance and protection.

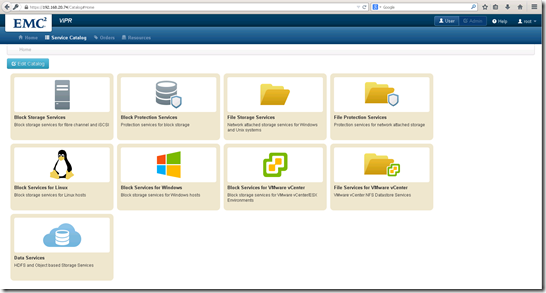

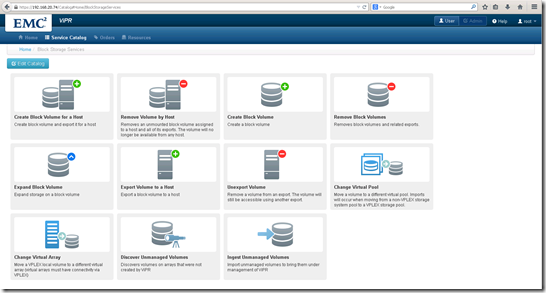

ViPR Installed and Configured

- ViPR is deployed but version 1.1.0.2.16 does not support ScaleIO.

- Note: ScaleIO support will be added in ViPR version 2.0 which is scheduled for release in Q2.

EMC ViPR SRM is deployed, but I haven’t really done anything with it to date.

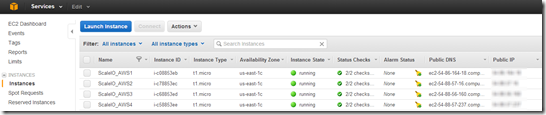

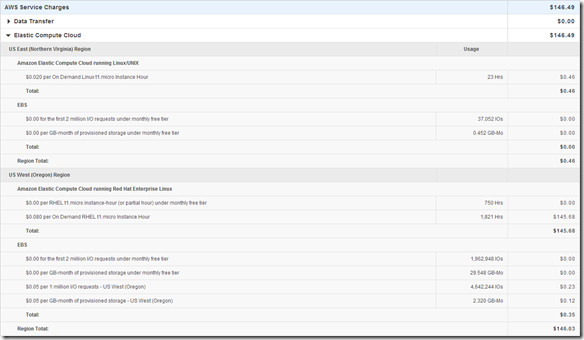

ScaleIO SDS nodes in AWS

- Four (4) AWS RHEL t1.micro instances provisioned and ScaleIO SDS nodes deployed and configured.

- Working with EMC Advanced Software Division to get an unlimited perpetual ScaleIO license so I can add the AWS SDS nodes to the existing ScaleIO configuration as a new pool (pool03).

- Do some testing against the AWS SDS nodes, scaling the number of nodes in AWS to see what kind of performance I can drive with t1.micro instances.
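Scaling the AWS SDS node count is easier when the count is a single parameter. The post doesn't say how the instances were provisioned (the AWS CLI and Fabric are mentioned earlier), so this sketch swaps in boto3's `run_instances`; the AMI ID, key pair name, region and tag are placeholders, and t1.micro is a previous-generation type that may not be offered in every region.

```python
def sds_launch_params(count=4, ami_id="ami-xxxxxxxx", key_name="scaleio-lab",
                      instance_type="t1.micro"):
    """Build run_instances arguments for `count` SDS nodes (placeholder AMI/key)."""
    return {
        "ImageId": ami_id,            # hypothetical RHEL AMI ID
        "InstanceType": instance_type,
        "MinCount": count,            # launch exactly `count` nodes or none
        "MaxCount": count,
        "KeyName": key_name,          # hypothetical key pair name
        "TagSpecifications": [{
            "ResourceType": "instance",
            "Tags": [{"Key": "Role", "Value": "scaleio-sds"}],
        }],
    }


def launch_sds_nodes(region="us-east-1", **kwargs):
    """Launch the nodes and return their instance IDs (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the parameter builder works without boto3
    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.run_instances(**sds_launch_params(**kwargs))
    return [inst["InstanceId"] for inst in response["Instances"]]
```

Setting `MinCount` equal to `MaxCount` makes the request all-or-nothing, so a scaling test starts with exactly the node count it asked for.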

Todo list (in no particular order)

- Complete AWS ScaleIO build-out and federation with the private ScaleIO implementation

    - Performance of private cloud compute doing I/O to the AWS ScaleIO pool

    - Using ScaleIO to migrate between the public and private cloud

    - Linear scale in the public and private cloud leveraging ScaleIO

- Complete ViPR SRM configuration

- Comparative benchmarking and implementation comparisons

    - ScaleIO EFD pool vs ScaleIO disk pool

    - ScaleIO EFD vs SAN EFD

    - ScaleIO vs VMware VSAN

    - ScaleIO vs Ceph, GlusterFS, FhGFS/BeeGFS or whatever other clustered file systems I can make time to play with

    - ScaleIO & ViPR vs Ceph & Swift (ViPR 2.0 required)

- Detailed implementation documentation

    - Install and configure

    - Management

Progress on all of the above has been slower than I had hoped; I’m squeezing in as much as possible late at night and on weekends because 120% of my time is consumed by revenue-producing activity.
