Khan: “Dreadnought class. Two times the size, three times the speed. Advanced weaponry. Modified for a minimal crew. Unlike most Federation vessels, it’s built solely for combat.”

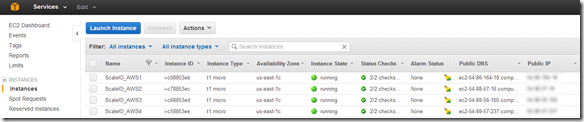

Extending ScaleIO to the public cloud using AWS RHEL 6.5 t1.micro instances and EBS and federating with my private cloud ScaleIO implementation.

This post is about federating ScaleIO across the public and private cloud, not the “Federation” of EMC, VMware, Pivotal and RSA. Sorry, but who doesn’t love the “Federation”? If nothing else, it takes me back to my childhood.

My Childhood:

If you don’t know what the above means and the guy on the right looks a little familiar, maybe from a Priceline commercial, don’t worry about it; it just means you’re part of a different generation (The Next Generation). If you are totally clueless about the above, you should probably stop reading now; if you can identify with anything above, it is probably safe to continue.

My Adulthood:

Wow, the above pictorial actually scares me a little; I really haven’t come very far.

Anyway let’s get started exploring the next frontier, certainly not the final frontier.

Note: I already deployed the four (4) RHEL 6.5 t1.micro AWS instances that I will be using in this post. This post focuses on the configuration of the instances, not their deployment. In Chapter III of this series I deploy at a larger scale using an AMI that I generated from ScaleIO_AWS1, which you will see how to configure in this post.

Log in to the AWS RHEL instance via SSH (Note: You will have to set up the required AWS key pairs, etc…)

Note: This link provides details on how to SSH to your AWS Linux instances using a key pair: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/AccessingInstancesLinux.html

Note: I am adding AWS SDS nodes (ScaleIO Data Server, NOT Software Defined Storage) to an existing private cloud ScaleIO implementation, so this will only cover installing the SDS component and the steps required to add the SDS nodes to the existing ScaleIO deployment.

ScaleIO Data Server (SDS) – Manages the capacity of a single server and acts as a backend for data access. The SDS is installed on all servers that contribute storage devices to the ScaleIO system. These devices are accessed through the SDS.

Below is what the current private cloud ScaleIO deployment looks like:

The goal here is to create pool03 which will be a tier of storage that will reside in AWS.

Once logged in to your AWS RHEL instance, validate that the following packages are installed: numactl and libaio

- #sudo -s

- #yum install libaio

- #yum install numactl

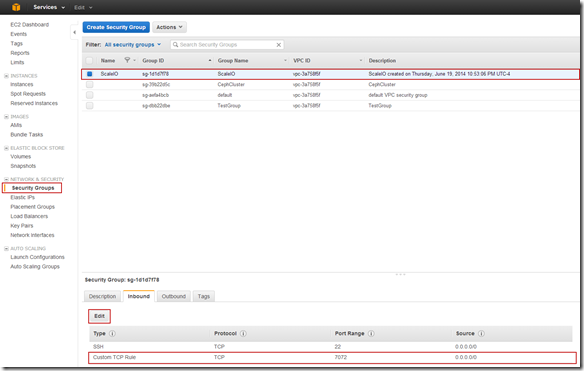
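A minimal sketch of how the package check could be scripted rather than eyeballed. It assumes `rpm -q` is an acceptable way to test for an installed package on RHEL; the helper name `missing_packages` is made up for illustration.

```shell
#!/usr/bin/env bash
# Print the name of every package in the argument list that rpm does not
# report as installed. Prints nothing when all of them are present.
missing_packages() {
  local pkg
  for pkg in "$@"; do
    rpm -q "$pkg" >/dev/null 2>&1 || echo "$pkg"
  done
}

# Usage (as root on the RHEL instance):
#   missing=$(missing_packages libaio numactl)
#   [ -z "$missing" ] || yum install -y $missing
```

Only the function is defined here; the usage lines are left as comments so nothing gets installed by accident.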

For SDS nodes, port 7072 needs to be opened. Because I have the ScaleIO security group, I can make the change in the security group.

Note: This environment is only for testing. There is nothing here that I care about; the data, VMs, etc. are all disposable, so opening port 7072 on the public IP is of no concern to me. In an actual implementation there would likely be a VPN between the public and private infrastructure components, and there would be no need to open port 7072 on the public IP address.
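I made the change in the console, but the same rule could be added with the AWS CLI. This is a rough sketch, not what I actually ran: the security group ID and source CIDR are placeholders, and the function only prints the command so you can review it before running it.

```shell
#!/usr/bin/env bash
# Build (but do not run) the AWS CLI call that opens TCP 7072 on a security group.
# sg-0123456789abcdef0 and 203.0.113.10/32 are placeholder values, not from my setup.
sds_ingress_cmd() {
  local group_id=$1 source_cidr=$2
  echo "aws ec2 authorize-security-group-ingress --group-id $group_id --protocol tcp --port 7072 --cidr $source_cidr"
}

sds_ingress_cmd sg-0123456789abcdef0 203.0.113.10/32
```

Restricting the source CIDR to the private cloud's public address is a safer default than 0.0.0.0/0, even in a throwaway lab.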

AWS SDS node reference CSV:

IP,Password,Operating System,Is MDM/TB,MDM NIC,Is SDS,is SDC,SDS Name,Domain,SDS Device List,SDS Pool List

#.#.#.#,********,linux,No,,Yes,No,aws1sds,awspdomain1,/dev/xvdf,pool03

#.#.#.#,********,linux,No,,Yes,No,aws2sds,awspdomain1,/dev/xvdf,pool03

#.#.#.#,********,linux,No,,Yes,No,aws3sds,awspdomain1,/dev/xvdf,pool03

#.#.#.#,********,linux,No,,Yes,No,aws4sds,awspdomain1,/dev/xvdf,pool03
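If you keep a reference CSV like this around, a few lines of awk can pull out the fields that matter when adding SDS nodes. This is only a sketch: the sample file uses documentation addresses (192.0.2.x) and a dummy password in place of the masked values, and `list_sds_rows` is a made-up helper name.

```shell
#!/usr/bin/env bash
# Print "name domain device pool" for every CSV row flagged as an SDS.
# Column positions follow the header line of the reference CSV.
list_sds_rows() {
  awk -F, 'NR > 1 && $6 == "Yes" { print $8, $9, $10, $11 }' "$1"
}

# Sample file with placeholder IPs and password (assumptions, not real values)
csv=$(mktemp)
cat > "$csv" <<'EOF'
IP,Password,Operating System,Is MDM/TB,MDM NIC,Is SDS,is SDC,SDS Name,Domain,SDS Device List,SDS Pool List
192.0.2.11,changeme,linux,No,,Yes,No,aws1sds,awspdomain1,/dev/xvdf,pool03
192.0.2.12,changeme,linux,No,,Yes,No,aws2sds,awspdomain1,/dev/xvdf,pool03
EOF

list_sds_rows "$csv"
```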

Copy the SDS rpm from the AWS1 node to the other 3 nodes:

- scp /opt/scaleio/siinstall/ECS/packages/ecs-sds-1.21-0.20.el6.x86_64.rpm root@#.#.#.#:~

- scp /opt/scaleio/siinstall/ECS/packages/ecs-sds-1.21-0.20.el6.x86_64.rpm root@#.#.#.#:~

- scp /opt/scaleio/siinstall/ECS/packages/ecs-sds-1.21-0.20.el6.x86_64.rpm root@#.#.#.#:~

Note: I copied the ECS (ScaleIO) install files from my desktop to AWS1, which is why the rpm is only being copied to AWS2, 3 and 4 above.
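With more than a handful of nodes, the copy is easier as a loop. A small sketch, assuming the same rpm path as above; the target addresses are placeholders, and the function prints the scp commands instead of running them.

```shell
#!/usr/bin/env bash
RPM=/opt/scaleio/siinstall/ECS/packages/ecs-sds-1.21-0.20.el6.x86_64.rpm

# Print one scp command per target host; pipe the output to sh to execute.
copy_sds_rpm_cmds() {
  local host
  for host in "$@"; do
    echo "scp $RPM root@$host:~"
  done
}

# 192.0.2.x are placeholder addresses for AWS2, AWS3 and AWS4
copy_sds_rpm_cmds 192.0.2.12 192.0.2.13 192.0.2.14
```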

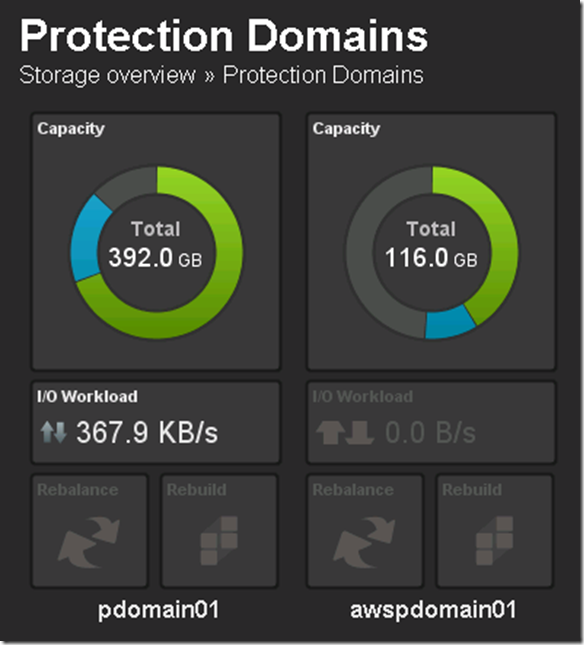

Add AWS Protection Domain:

- scli --mdm_ip 10.10.0.25 --add_protection_domain --protection_domain_name awspdomain01

Protection Domain – A Protection Domain is a subset of SDSs. Each SDS belongs to one (and only one) Protection Domain. Thus, by definition, each Protection Domain is a unique set of SDSs.

Add Storage Pool to AWS Protection Domain:

- scli --mdm_ip 10.10.0.25 --add_storage_pool --protection_domain_name awspdomain01 --storage_pool_name pool03

Storage Pool – A Storage Pool is a subset of physical storage devices in a Protection Domain. Each storage device belongs to one (and only one) Storage Pool. A volume is distributed over all devices residing in the same Storage Pool. This allows more than one failure in the system without losing data. Since a Storage Pool can withstand the loss of one of its members, having two failures in two different Storage Pools will not cause data loss.

Add SDS to Protection Domain and Pool:

- scli --mdm_ip 10.10.0.25 --add_sds --sds_ip 54.86.164.18 --protection_domain_name awspdomain01 --device_name /dev/xvdf --storage_pool_name pool03 --sds_name aws1sds

- scli --mdm_ip 10.10.0.25 --add_sds --sds_ip 54.88.57.16 --protection_domain_name awspdomain01 --device_name /dev/xvdf --storage_pool_name pool03 --sds_name aws2sds

- scli --mdm_ip 10.10.0.25 --add_sds --sds_ip 54.88.56.160 --protection_domain_name awspdomain01 --device_name /dev/xvdf --storage_pool_name pool03 --sds_name aws3sds

- scli --mdm_ip 10.10.0.25 --add_sds --sds_ip 54.88.57.237 --protection_domain_name awspdomain01 --device_name /dev/xvdf --storage_pool_name pool03 --sds_name aws4sds
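The four add_sds commands differ only in the SDS name and IP, so they can be generated from a list. This is a sketch under a couple of assumptions: long options are written with plain double hyphens, and the helper `add_sds_cmds` is made up for illustration. It prints the commands for review and runs nothing.

```shell
#!/usr/bin/env bash
MDM_IP=10.10.0.25
PD=awspdomain01
POOL=pool03
DEVICE=/dev/xvdf

# Takes name=ip pairs and prints the matching scli add_sds command for each.
add_sds_cmds() {
  local pair name ip
  for pair in "$@"; do
    name=${pair%%=*}   # text before the first '='
    ip=${pair#*=}      # text after the first '='
    echo "scli --mdm_ip $MDM_IP --add_sds --sds_ip $ip --protection_domain_name $PD --device_name $DEVICE --storage_pool_name $POOL --sds_name $name"
  done
}

add_sds_cmds aws1sds=54.86.164.18 aws2sds=54.88.57.16 aws3sds=54.88.56.160 aws4sds=54.88.57.237
```

Piping the output to `sh` on a host with scli installed would execute the commands one after another.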

Create 20 GB volume:

- scli --mdm_ip 10.10.0.25 --add_volume --protection_domain_name awspdomain01 --storage_pool_name pool03 --size 20 --volume_name awsvol01

Some other relevant commands:

- scli --mdm_ip 10.10.0.25 --remove_sds --sds_name aws1sds

- scli --mdm_ip 10.10.0.25 --sds --query_all_sds

- scli --mdm_ip 10.10.0.25 --query_storage_pool --protection_domain_name awspdomain01 --storage_pool_name pool03

Note: I always use the --mdm_ip switch; that way I don’t have to worry about where I am running the commands from.
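One way to avoid retyping the MDM address is a tiny wrapper function. This is a convenience sketch, not part of ScaleIO: `mscli`, `MDM_IP` and `SCLI` are names I made up, and it assumes scli takes its long options with double hyphens.

```shell
#!/usr/bin/env bash
MDM_IP=${MDM_IP:-10.10.0.25}   # MDM address used throughout this post
SCLI=${SCLI:-scli}             # set SCLI=echo to preview without running anything

# Run scli with the MDM address prepended to whatever arguments are given.
mscli() {
  "$SCLI" --mdm_ip "$MDM_IP" "$@"
}

# Usage:
#   mscli --query_storage_pool --protection_domain_name awspdomain01 --storage_pool_name pool03
```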

Meta Data Manager (MDM) – Configures and monitors the ScaleIO system. The MDM can be configured in a redundant Cluster Mode with three members on three servers, or in a Single Mode on a single server.

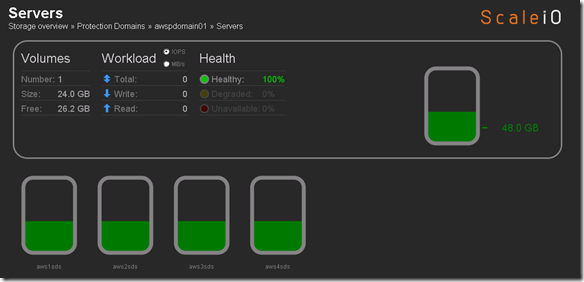

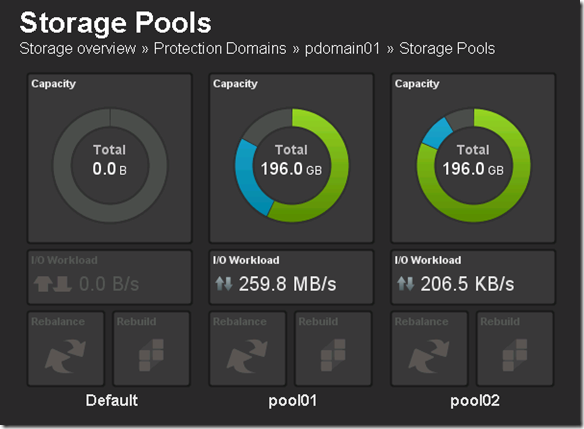

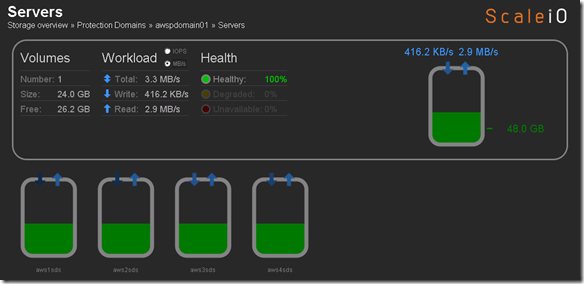

ScaleIO Deployed in AWS and federated with private cloud ScaleIO deployment

My ScaleIO (ECS) implementation now has 3 tiers of storage:

- Tier 1 (Local SSD) = pdomain01, pool01

- Tier 2 (Local HDD) = pdomain01, pool02

- Tier 3 (AWS HDD) = awspdomain01, pool03

Map AWS volume to local SDCs:

- scli --mdm_ip 10.10.0.25 --map_volume_to_sdc --volume_name awsvol01 --sdc_ip 10.10.0.21

- scli --mdm_ip 10.10.0.25 --map_volume_to_sdc --volume_name awsvol01 --sdc_ip 10.10.0.22

- scli --mdm_ip 10.10.0.25 --map_volume_to_sdc --volume_name awsvol01 --sdc_ip 10.10.0.23

- scli --mdm_ip 10.10.0.25 --map_volume_to_sdc --volume_name awsvol01 --sdc_ip 10.10.0.24

ScaleIO Data Client (SDC) – A lightweight device driver that exposes ScaleIO volumes as block devices to the application residing on the same server on which the SDC is installed.

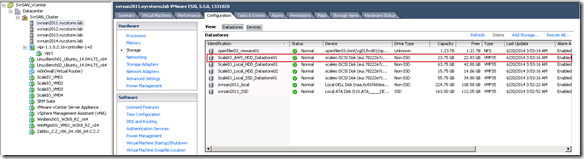

Map AWS volume to ESX initiators:

- scli --mdm_ip 10.10.0.25 --map_volume_to_scsi_initiator --volume_name awsvol01 --initiator_name svrsan2011

- scli --mdm_ip 10.10.0.25 --map_volume_to_scsi_initiator --volume_name awsvol01 --initiator_name svrsan2012

- scli --mdm_ip 10.10.0.25 --map_volume_to_scsi_initiator --volume_name awsvol01 --initiator_name svrsan2013

- scli --mdm_ip 10.10.0.25 --map_volume_to_scsi_initiator --volume_name awsvol01 --initiator_name svrsan2014

Note: I already created the SCSI initiators and named them; this is NOT documented in this post. I plan to craft an A to Z how-to when I get some time.
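Both mapping steps (SDCs and ESX initiators) follow the same pattern, so one generic helper can print either list. A sketch only: `map_cmds` is a made-up name, double-hyphen long options are assumed, and nothing is executed.

```shell
#!/usr/bin/env bash
MDM_IP=10.10.0.25
VOLUME=awsvol01

# map_cmds <map_flag> <target_flag> <target>...
# Prints one scli mapping command per target.
map_cmds() {
  local map_flag=$1 target_flag=$2 target
  shift 2
  for target in "$@"; do
    echo "scli --mdm_ip $MDM_IP $map_flag --volume_name $VOLUME $target_flag $target"
  done
}

map_cmds --map_volume_to_sdc --sdc_ip 10.10.0.21 10.10.0.22 10.10.0.23 10.10.0.24
map_cmds --map_volume_to_scsi_initiator --initiator_name svrsan2011 svrsan2012 svrsan2013 svrsan2014
```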

AWS ScaleIO Datastore now available in VMware (of course there are some steps here, rescan, format, etc…)

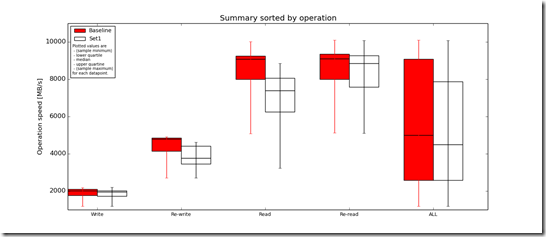

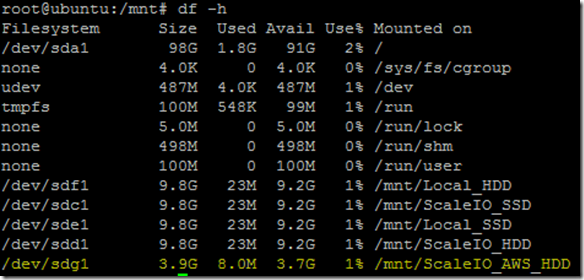

Figured I would do some I/O just for giggles (I am sure it will be very slow, using t1.micro instances and not at scale):

- Add a 4GB volume (vmdk, thick eager zeroed) to my Linux test host

- partition, format and mount the AWS device

- Do some preliminary testing using FIO (http://git.kernel.dk/?p=fio.git;a=summary) and FFSB (http://sourceforge.net/projects/ffsb/)
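For anyone wanting to try something similar, here is a rough idea of what a fio run against the mounted AWS device could look like. These are not the exact parameters I used: the mount point /mnt/aws, the 70/30 read/write mix, the block size, queue depth and runtime are all illustrative assumptions. The function prints the command rather than running it.

```shell
#!/usr/bin/env bash
# Print a fio command line for a mixed random read/write test.
# /mnt/aws is a hypothetical mount point; all sizing values are illustrative.
fio_cmd() {
  local target_dir=$1
  echo "fio --name=awsvol-randrw --directory=$target_dir --size=1g --rw=randrw --rwmixread=70 --bs=4k --ioengine=libaio --direct=1 --iodepth=16 --runtime=60 --time_based --group_reporting"
}

fio_cmd /mnt/aws
```

Using `--direct=1` bypasses the page cache, which matters here because a 4GB test disk could otherwise be served largely from memory.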

Screenshot below is activity while running I/O load to the AWS volume:

It is important to note that the public/private ScaleIO federation was a PoC, just to see how it could be done. It was intended as a functional exercise rather than a performance exercise. Functionally things worked well; the plan now is to scale up the number and type of nodes to see what kind of performance I can get from this configuration. Hopefully no one will push back on my AWS expenses 🙂

I did some quick testing with FFSB and FIO. After seeing the results returned by both, I wanted some additional data for a brief analysis, so I ran IOzone (http://www.iozone.org/) against the AWS ScaleIO volume (awspdomain01, pool03) and the local ScaleIO HDD volume (pdomain01, pool02) for comparison.

IOzone Results (very preliminary):

Preliminary ScaleIO Local HDD vs ScaleIO AWS HDD distributed volume performance testing analysis output: http://nycstorm.com/nycfiles/repository/rbocchinfuso/ScaleIO_Demo/aws_benchmark/index.html

Considering that I only have 4 x t1.micro instances, which are very limited in terms of IOPS and bandwidth, the above is not that bad.

Next steps:

- Automate the creation of AWS t1.micro instances and deployment of SDS nodes

- Additional performance testing

- Add AWS nodes to Zabbix (http://www.zabbix.com/)

I am interested in seeing what I can do as I scale up the AWS configuration. Stay tuned.