This blog is a high-level overview of some extensive testing conducted on the EMC (CLARiiON) CX3-80 with 15K RPM FC (Fibre Channel) disks and the EMC (CLARiiON) CX4-120 with EFDs (Enterprise Flash Drives), formerly known as SSDs (solid state disks).

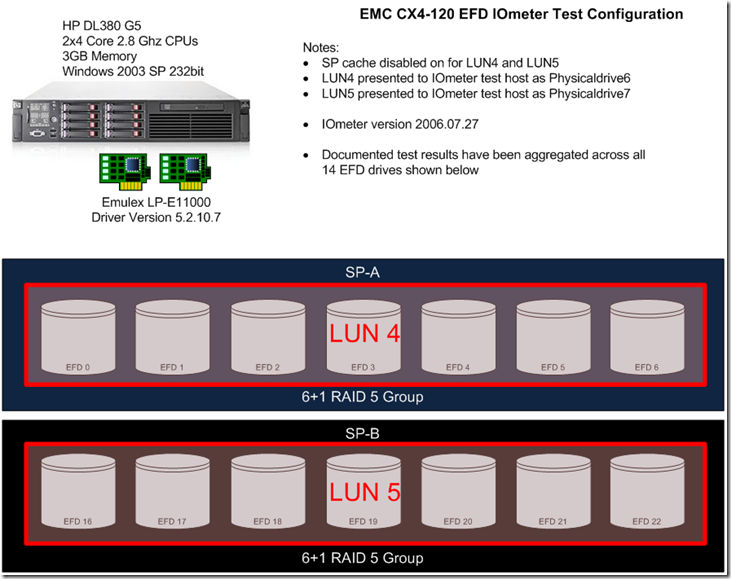

Figure 1: CX4-120 with EFD test configuration.

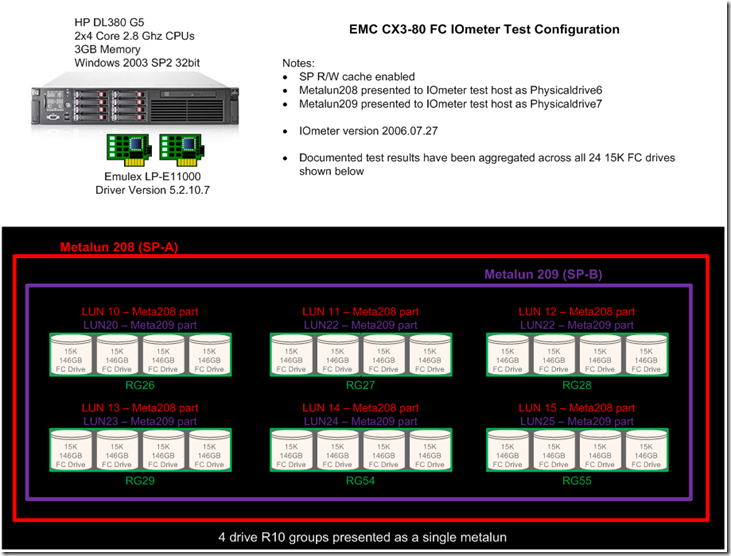

Figure 2: CX3-80 with 15K RPM FC test configuration.

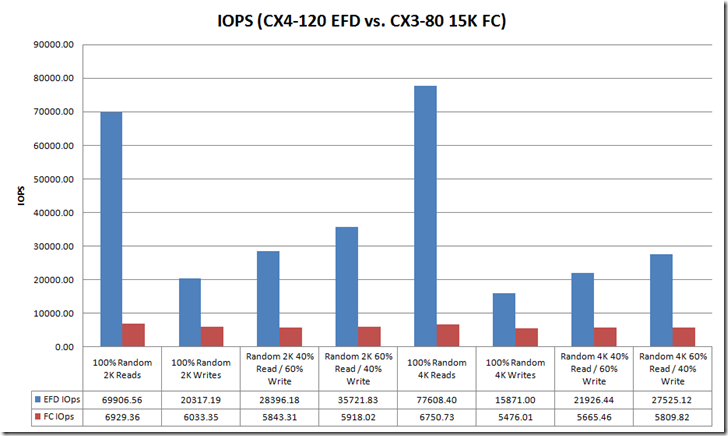

Figure 3: IOPs Comparison

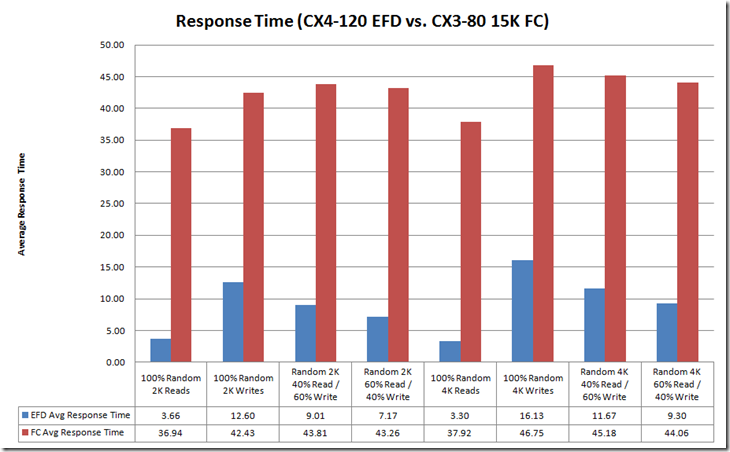

Figure 4: Response Time

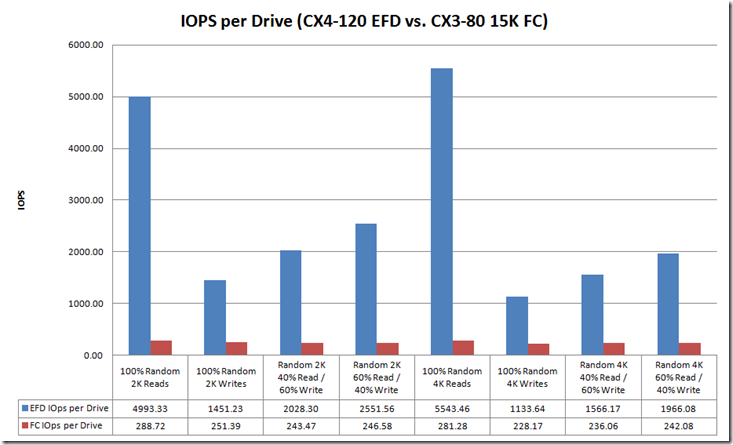

Figure 5: IOPs Per Drive

Notice that the CX3-80 15K FC drives are servicing ~250 IOPs per drive, which exceeds the theoretical maximum of 180 IOPs for a 15K FC drive. This is due to write caching. Note that cache is disabled for the CX4-120 EFD tests. This is important because a high write I/O load can cause forced cache flushes, which can dramatically impact the overall performance of the array. Because cache is disabled on EFD LUNs, forced cache flushes are not a concern.
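For readers wondering where a figure like 180 IOPs per drive comes from, here is a rough sketch of the usual back-of-the-envelope estimate: one random I/O costs about one average seek plus half a disk rotation. The function name `theoretical_iops` and the 3.5 ms average seek time are my assumptions for illustration; they are not taken from the test data or from any drive spec sheet.

```python
def theoretical_iops(rpm: float, avg_seek_ms: float) -> float:
    """Rough random-I/O ceiling for a single spinning disk (no cache)."""
    # Average rotational latency is half of one revolution, in milliseconds.
    rotational_latency_ms = 0.5 * 60_000.0 / rpm
    # One random I/O costs roughly one seek plus one rotational delay.
    service_time_ms = avg_seek_ms + rotational_latency_ms
    return 1000.0 / service_time_ms


# Assumed average seek time of 3.5 ms for a 15K RPM drive (illustrative only).
per_drive = theoretical_iops(rpm=15_000, avg_seek_ms=3.5)
print(f"~{per_drive:.0f} IOPs per 15K RPM drive")
print(f"~{per_drive * 24:.0f} IOPs for 24 drives with no help from cache")
```

With these assumed inputs the estimate lands close to the 180 IOPs ceiling quoted above, which is why ~250 IOPs per drive only makes sense once write caching is absorbing part of the load.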

The table below summarizes the test configuration and findings:

| Array | CX3-80 | CX4-120 |
| --- | --- | --- |
| Configuration | (24) 15K FC Drives | (7) EFD Drives |
| Cache | Enabled | Disabled |
| Footprint | | ~42% drive footprint reduction |
| Sustained Random Read Performance | | ~12x increase over 15K FC |
| Sustained Random Write Performance | | ~5x increase over 15K FC |

In summary, EFD is a game-changing technology. There is no doubt that for small-block random read and write workloads (e.g., Exchange, MS SQL, Oracle, etc.) EFD dramatically improves performance and reduces the risk of performance issues.

This post is intended to be an overview of the exhaustive testing that was performed. I have results for a wide range of transfer sizes beyond the 2k and 4k results shown in this post, and I also have Jetstress results. If you are interested in data that you don’t see in this post, please email me at rbocchinfuso@gmail.com.

