My inspiration

My family and I use our garage as the primary method of ingress and egress from our home. Almost daily I open the garage door using the standard wall mount garage door button, I also drive away and 30 seconds later I think to myself “did I close the garage door”. The thought of “did I close the garage door” results in one of two outcomes.

- I am close enough that I can strain my neck and look back over my left shoulder to see if I closed the door.

- I went in a direction that does not allow me to look back over my left shoulder to see the state of the door or I am too far to see the state of the door, this results in me turning around and heading home to appease my curiosity.

My Goal(s)

Initial goal: To implement a device that allowed me to remotely check the state of my garage door and change the state of the door remotely (over the internet). Pretty simple.

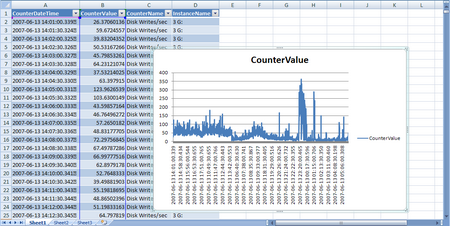

Implemented analytics add-on: Given the intelligence of the device I was building and deploying to gather door state and facilitate state changes (open | closed) I thought wouldn’t it be cool if I captured this data and did some analytics. E.g. – When is the door opened and closed, how long is it kept in this state and started to infer some behavioral patters. I implemented Rev 1 of this which I will talk about below.

Planned add-ons:

- Camera with motion capture and real-time streaming.

Note: Parts for this project on order and I will detail my implementation as an update to this post once I have it completed. - Amazon Alexa (Echo) (http://goo.gl/P3uNY6) voice control.

- 3D printed mounting bracket (bottom of priority list)

My First Approach

Note: This only addressed my initial design goal above. Another reason I am glad I bagged the off-the-shelf approach and went with the maker approach.

I have automated most of my home with an ISY99i (https://goo.gl/YOklKH) and my thought was I could easily leverage the INSTEON 74551 Garage Door Control and Status Kit (http://goo.gl/Soo31V). To make a long story short this device is a PoS so it became an AMZN return. After doing more research on what was available off-the-shelf and aligning it with my goals I decided that I should build rather than buy.

The Build

Parts list:

Note: Many of these parts can be changed out for similar versions.

- 1 x Raspberry Pi B+ (https://www.raspberrypi.org/products/model-b-plus/)

- 1 x Kingston 8 GB microSDHC Class 4 Flash Memory Card SDC4/8GB (http://goo.gl/8e5ELJ)

- 1 x UCS 802.11 Wireless Adapter / Dongle (The one I am using: http://goo.gl/mIYhWy)

- 1 x Micro USB Power Supply (The one I am using: http://goo.gl/yckjHW)

- 2 x 10 Ohm resistors (http://goo.gl/XFafSl)

- 1 x 2 Channel 5v Relay (http://goo.gl/3J3Kkp)

- 1 x Magnetic Contact Switch / Door Sensor (https://www.adafruit.com/products/375)

- Various wire

- Some suggested purchases

- sunkee 100pcs 1p to 1p female to female jumper wire Dupont cable for Arduino 20cm (http://goo.gl/pl56lP)

- 50 PCS Jumper Wires Premium 200mm M/F Male-to-Female (http://goo.gl/kIww79)

- Some suggested purchases

- Other notable items:

- Cardboard – You will notice my fancy mounting system in the post installation photos below.

Note: From Amazon box so technically Amazon provided this as well. I may 3D print a mount at some point in the the future, if I do I will update post with CAD file. - Zip ties (http://goo.gl/4ecElP)

- Small polymer screws (http://goo.gl/U0Eau2)

- Fork Terminal Sta-Kons (http://goo.gl/A0tdMJ)– Always make connections a little nicer (used these on the wires connected to the back of the garage door opener, you will see in the post implementation photos below).

- Heat shrink (http://goo.gl/EYovo5)

- Cardboard – You will notice my fancy mounting system in the post installation photos below.

Various tools required / used:

- Wire cutter / stripper

- Various screwdrivers

- Whatever you need to make connections on you garage door opener.

- Tiny slotted screwdriver (required to tighten terminals on relay board).

- Soldering iron

- If you don’t have a soldering iron you could always go with a solderless breadboard (http://goo.gl/v48m6M) or butt splice Sta-Kons (http://goo.gl/oHauzF) approach.

- Heat gun (required for heat shrink)

- Substitute a good hair dryer or lighter (be careful with lighter not to melt wires).

Planned camera add-on:

Note: Parts ordered but have not yet arrived and this is not yet implemented.

- 1 x Arducam 5 Megapixels 1080p Sensor OV5647 Mini Camera Video Module for Raspberry Pi Model A/B/B+ and Raspberry Pi 2 (http://goo.gl/XhCy5L)

- 1 x White ScorPi B+, Camera Mount for your Raspberry Pi Model B+ and white Camlot, Camera leather cover (http://goo.gl/nRHvED)

Note: Nice to have, certainly not required.

Although below you will see my breadboard design I actually soldered and protected all final connections with heat shrink (you will see this in my post installation photos below).

Required Software

Note: This is not a Raspberry Pi tutorial so I am going to try to keep the installation and configuration of Raspbian and other software dependencies limited to the essentials but with enough details and reference material to make getting up and running possible.

Gather requisite software and preparing to boot:

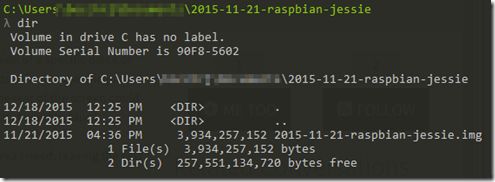

- Download Respbian Jessie OS (https://goo.gl/BYkhLp)

- Download Win32 Disk Imager (http://goo.gl/hz9BD)

Note: This is how you will write the Raspbian Image to your 8 GB microSDHC Class 4 Flash Memory Card.

Note: If you are not using Windows then Win32 Disk Imager is not an option.- For Linux use “dd” to write the image to your 8 GB microSDHC Class 4 Flash Memory Card. (https://goo.gl/GWLKqx)

- For MAC “dd” can be used as well. (https://goo.gl/uvvsXG)

- Unzip the Raspbian Jessie Image and Write Image (,img file) to 8 GB microSDHC Class 4 Flash Memory Card.

- Insert 8 GB microSDHC Class 4 Flash Memory Card into computer open Win32 Disk Imager and write Raspbian image to microSD card (in this case drive G:)

- Once complete eject the microSD card from your computer and insert it into your Raspberry Pi.

- At this time also insert your USB wireless dongle.

We are now ready to boot our Raspberry Pi for the first time bur prior to doing so we need to determine how we will connect to the console. There are two options here.

- Using a HDMI connected monitor with a USB wired or wireless keyboard.

- Using a Serial Console (http://elinux.org/RPi_Serial_Connection)

Pick your preferred console access method from the above two options and connect and then power on the the Raspberry Pi by providing power to the the micro USB port.

Booting the raspberry Pi for the first time:

- Default Username / Password: pi / raspberry

- Once the system is booted login via the console using the default Username and Password above.

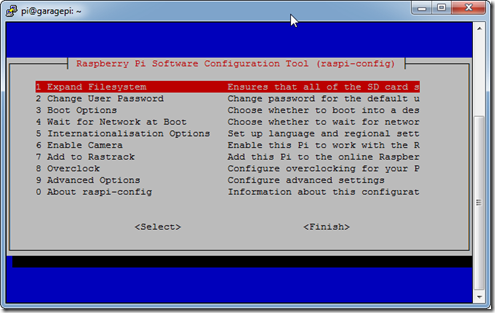

- Perform the first time configuration by executing “sudo raspi-config”

- At this point you are going to run options 1,2,3 and 9 then reboot.

- Option 1 and 2 are self explanatory.

- Option 1 expands the root file system to make use of your entire SD card.

- Option 2 allows you to change the default password for the “pi” user

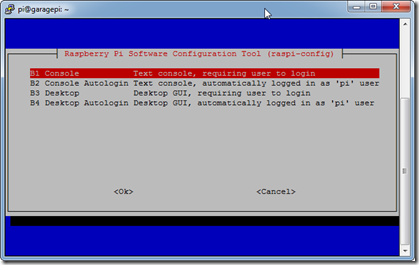

- Using option 3 we will tell the Raspberry Pi to boot to the console (init level 3).

- Select option B1 and then OK.

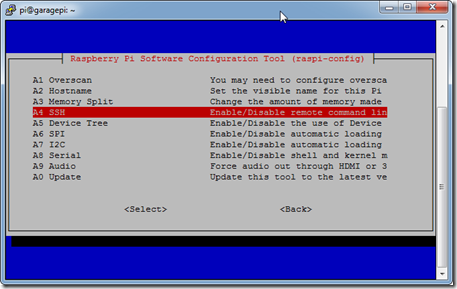

- Next select option 9, then A4 and enable SSH.

- Select Finish and Reboot

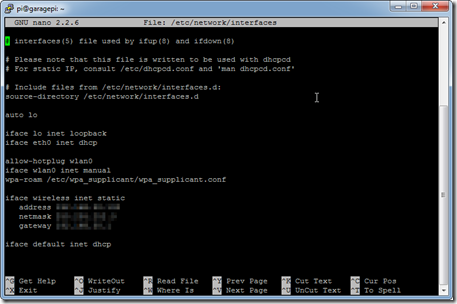

Once the system reboots it is time to configure the wireless networking.

Note: I will used nano for editing, little easier for those not familiar with vi but vi can also be used.

- Once the system is booted login via the console using the “pi” user and whatever you set the password to.

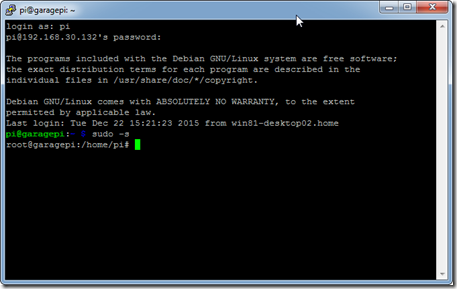

- Enter: “sudo –s” (this will elevate us to root so we don’t have to preface every command with “sudo”)

- To setup wireless networking we will need to edit the following files:

- /etc/wpa_supplicant/wpa_supplicant.conf

- /etc/network/interfaces

- /etc/hostname

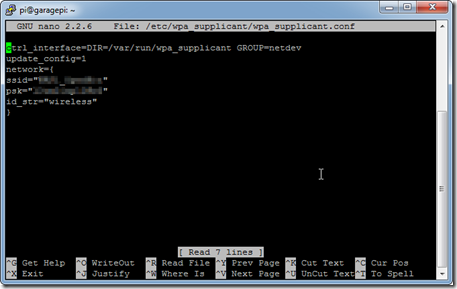

- nano /etc/wpa_supplicant/wpa_supplicant.conf

You will likely have to add the following section:network={

ssid=”YOURSSID”

psk=”YOURWIRELESSKEY”

id_str=”wireless”

} - nano /etc/network/interfaces

You will likely have to edit/add the following section:

allow-hotplug wlan0

iface wlan0 inet manual

wpa-roam /etc/wpa_supplicant/wpa_supplicant.confiface wireless inet static

address [YOURIPADDRESS]

netmask [YOUSUBNET]

gateway [YOURDEFAULTGW] - nano /etc/hostname

- Set your hostname to whatever you like, you will see I call mine “garagepi”

- reboot

- Once the reboot is complete the wireless networking should be working, you should be able to ping your static IP and ssh to your Raspberry Pi as user “pi”.

Raspberry Pi updates and software installs

Now that our Raspberry Pi is booted and on the network let start installing the required software.

- ssh to the Rasberry Pi using your static IP or DNS resolvable hostname.

- On Windows you can use PuTTY (http://www.chiark.greenend.org.uk/~sgtatham/putty/download.html) to ssh. On Linux or MAC just open a terminal session and type “ssh pi@IPADDRESS”.

- Login as “pi”

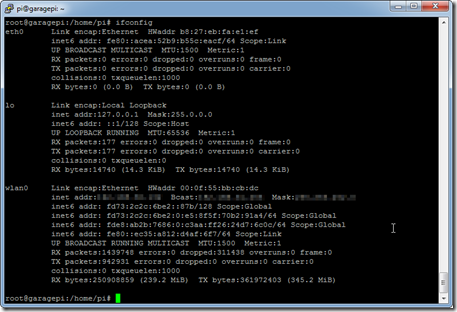

- Check that networking looks good by executing “ifconfig”

Note: It’s going to look good otherwise you would not have been able to ssh to the host but if you need to check from console issuing a “ifconfig” would be a good starting place. If you are having issues consult Google on Raspberry Pi wireless networking.

- Update Raspbian

- sudo apt-get update

- sudo apt-get upgrade

- Additional software installs

- sudo apt-get –y install git

- sudo gem install gist

- sudo apt-get –y install python-dev

- sudo apt-get –y install python-rpi.gpio

- sudo apt-get –y install curl

- sudo apt-get –y install dos2unix

- sudo apt-get –y install daemon

- sudo apt-get –y install htop

- sudo apt-get –y install vim

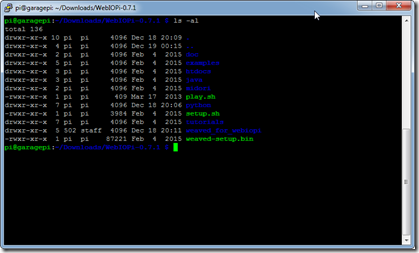

- Install WebIOPi (http://webiopi.trouch.com/)

- wget http://sourceforge.net/projects/webiopi/files/WebIOPi-0.7.1.tar.gz/download

- tar zxvf WebIOPi-0.7.1.tar.gz

- cd WebIOPi-0.7.1

Note: You may need to chmod –R 755 ./WebIOPi-0.7.1 - sudo ./setup.sh

- Follow prompts

Note: Setting up a Weaved account not required. I suggest doing it just to play with Weaved (https://www.weaved.com/) but I just use dynamic DNS and port forwarding for remote access to the device. I will explain this more later in the post.

- Follow prompts

- sudo update-rc.d webiopi defaults

- sudo reboot

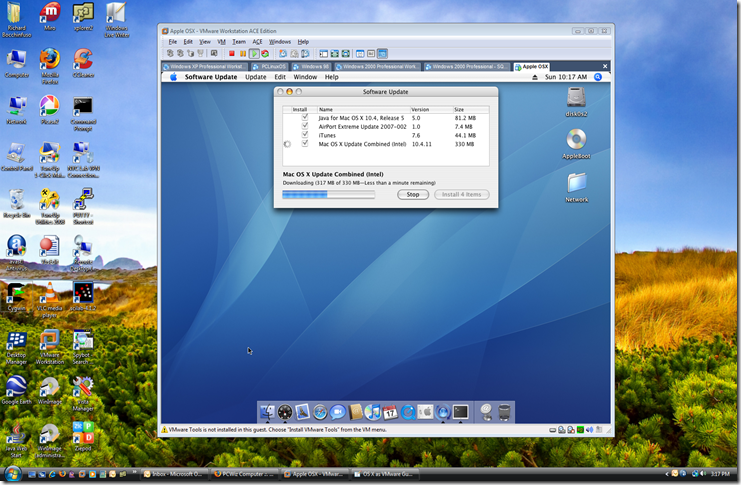

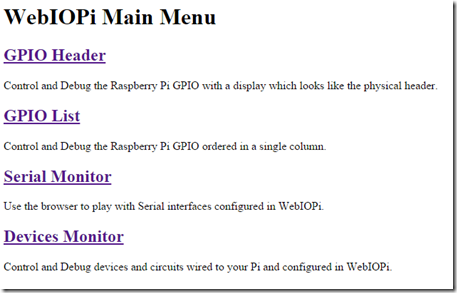

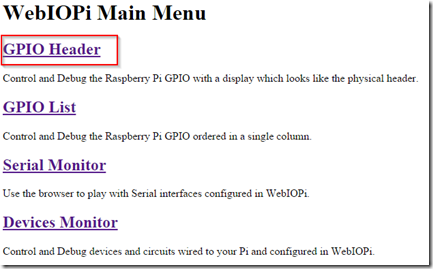

Once the system reports WebIOPi should be successfully installed and running. To test WebIOPi open your browser and open the following URL: http://YOURIPADDRESS:8000

- You should get a HTTP login prompt.

- Login with the default username / password: webiopi / raspberry

- If everything is working you should see the following:

We now have all the software installed that will enable us to get status and control our garage door. We are not going to prep for the system for the analytics aspect of the project.

- Create an Initial State account.

- Once your account is created, login in and navigate to “my account” by clicking your account name in the upper right hand corner of the screen and selecting “my account”

- Scroll to the bottom of the page and make note of or create a “Streaming Access Key”

- As “pi” user from Raspberry Pi ssh session run the following command: \curl -sSL https://get.initialstate.com/python -o – | sudo bash

Note: Be sure to include the leading “\”- Follow the prompts – if you say “Y” to the “Create an example script?” prompt, then you can designate where you’d like the script and what you’d like to name it. Your Initial State username and password will also be requested so that it can autofill your Access Key. If you say “n” then a script won’t be created, but the streamer will be ready for use.

OK all of of software prerequisites are done, let’s get our hardware built!

Shutdown and unplug the Raspberry Pi

The Hardware Build

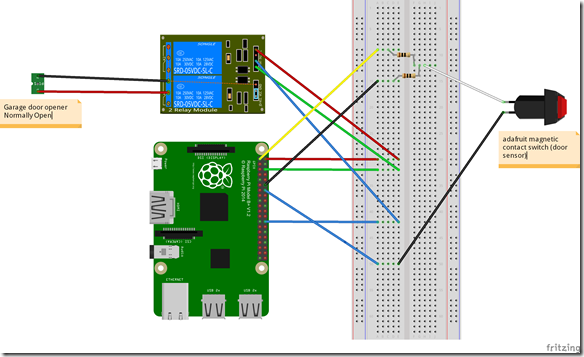

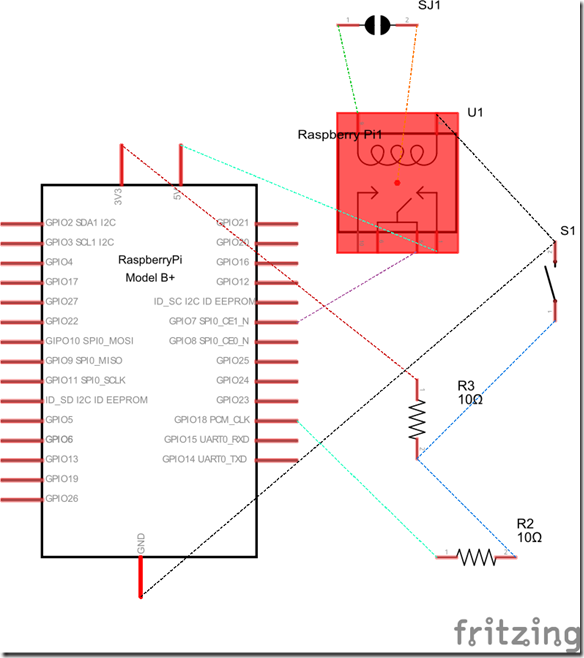

Note: I used fritzing (http://fritzing.org/home/) to prototype my wiring and design. This is not required but as you can see below it does a nice job with documenting your project and also allowing you to visualize circuits prior to doing the physical soldering. I did not physically breadboard the design, I used fritzing instead.

Breadboard Prototype

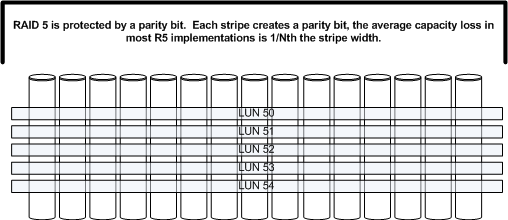

Connections are as follow:

- Pin 2 (5v) to VCC on 2 Channel 5v Relay

- Pin 6 (ground) to GND on 2 Channel 5v Relay

- Pin 26 (GPIO 7) tom IN1 on 2 Channel 5v Relay

- Pin 1 (3.3v) to 10k Ohm resistor to Common on Magnetic Contact Switch / Door Sensor

- Pin 12 (GPIO 18) to 10k Ohm resistor to Common on Magnetic Contact Switch / Door Sensor

Note: Make sure you include the 10k Ohm resistors otherwise there will be a floating GPIO status. - Pin 14 (ground) to Normally Open on Magnetic Contact Switch / Door Sensor

Schematic

Before mounting the device and connecting the device to our garage door (our final step) let’s do some preliminary testing.

- Power on the Raspberry Pi

- ssh to the Rasberry Pi using your static IP or DNS resolvable hostname.

- Lognin as “pi”

- Open your browser and open the following URL: http://YOURIPADDRESS:8000

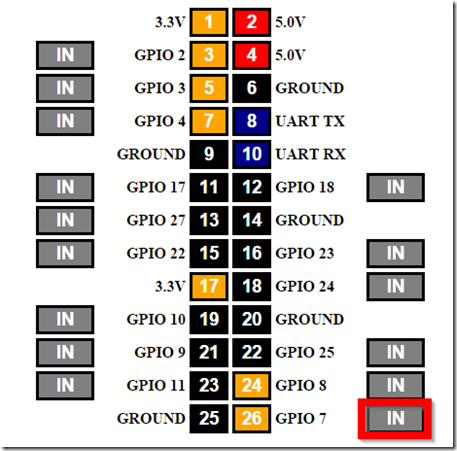

- Click on “GPIO Header”

- Click on the “IN” button next to Pin 26 (GPIO 7) (big red box around it below)

- You should hear the relay click and the LED on the relay should illuminate.

- If this works you are in GREAT shape, if not you need to troubleshoot before proceeding.

Connect the relay to proper terminal on your garage door opener.

Note: Garage door openers can be a little different so my connection may not exactly match your connections. The relay is just closing the circuit just like your traditional garage door opener button.

As I mentioned above I soldered all my connections and protected them with heat shrink but there are lots of other ways to accomplish this which I talked about earlier.

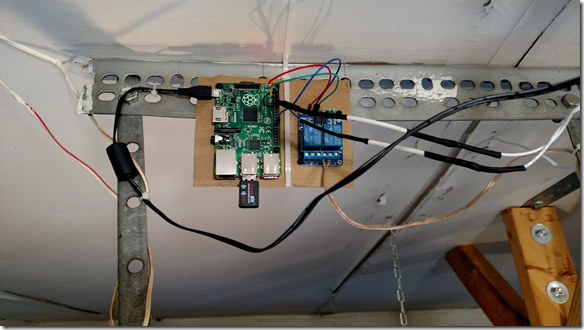

Finished Product (post installation photos)

Above you can see the wires coming from the relay (gold colored speaker wire on the right, good gauge for this application and what I had laying around)

Below you can see the connections to the two leftmost terminals on the garage door opener (I’m a fan of sta-kons to keep things neat)

OK, now that our hardware device is ready to go and connected to our garage door opener let’s power it up.

Once the system is powered up let’s login as pi and download the source code to make everything work.

- ssh to your Raspberry Pi

- login as “pi”

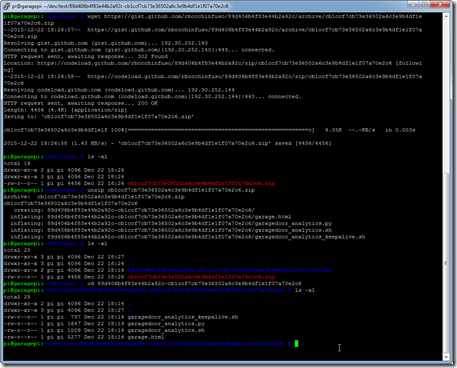

- wget https://gist.github.com/rbocchinfuso/89d406b4f83e44b2a92c/archive/cb1ccf7cb73e36502a6c3e9b4df1e1f07a70e2c6.zip

- unzip cb1ccf7cb73e36502a6c3e9b4df1e1f07a70e2c6.zip

- cd 89d406b4f83e44b2a92c-cb1ccf7cb73e36502a6c3e9b4df1e1f07a70e2c6

Next we need to put the files in their appropriate locations. There are no rules here but you may need to modify the source a bit if you make changes to the locations.

- mkdir /usr/share/webiopi/htdocs/garage

- cp ./garage.html /usr/share/webiopi/htdocs/garage

Note: garage.hrtml can also be places in /usr/share/webiopi/htdocs and you will not need to include /garage/ in the URL. I use directories because I am serving multiple apps for this Raspberry Pi. - mkdir ~pi/daemon

- cp garagedoor_analytics.py ~/pi/daemon

Note: You will need to edit this file to enter your Initial State Access Key which we made note of earlier. - cp garagedoor_analytics.sh ~/pi/daemon

- cp garagedoor_analytics_keepalive.sh ~/pi/daemon

Make required crontab entries:

- sudo crontab –e

Note: This edits the root crontab - You should see a line that looks like the following:

#@reboot /usr/bin/startweaved.sh

This is required to use Weaved. If you remember earlier I said I just use port forwarding so I don’t need Weaved so I commented this out in my final crontab file. - Here is what my root crontab entries look like:

#@reboot /usr/bin/startweaved.sh

@reboot /home/pi/daemon/garagedoor_analytics_keepalive.sh

*/5 * * * * /home/pi/daemon/garagedoor_analytics_keepalive.sh

*** IMPORTANT *** Change the WebIOPi server password.

- sudo webiopi-passwd

Reboot the Raspberry Pi (sudo reboot)

Let’s login to the Raspberry Pi and do some testing

- Check to see if the garagedoor_analytics.py script is running

- Open the following URL on desktop (or mobile): http://YOURIPADDRESS/garage/garage.html

- Login with the WebIOPi username and password which you set above.

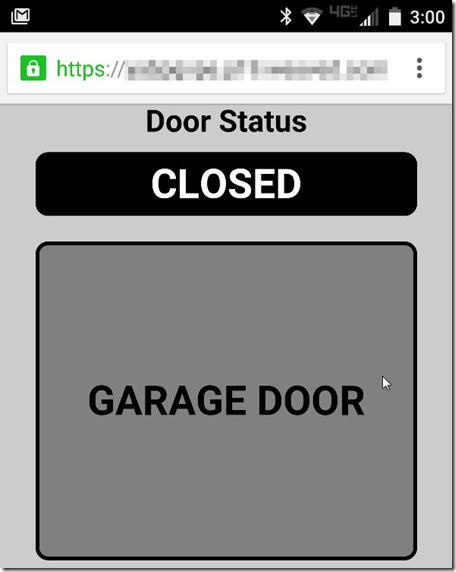

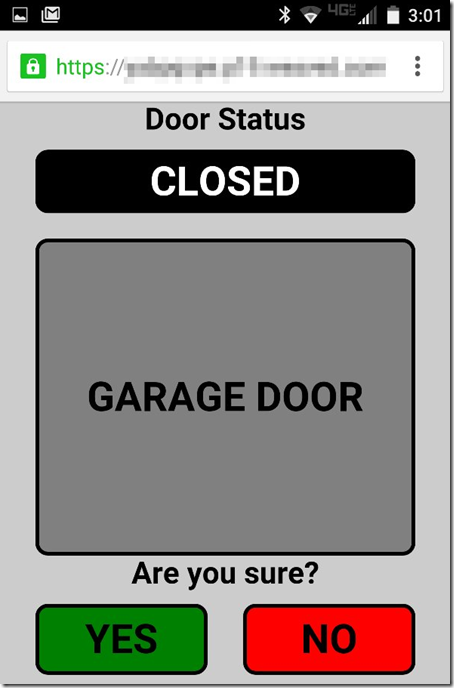

This is what we want to see. - Click “Garage Door”

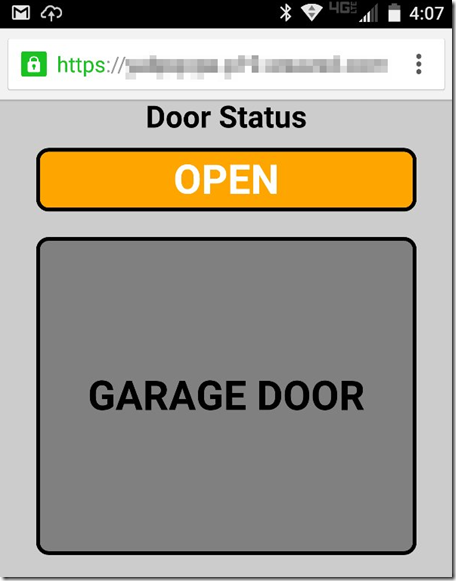

Confirm you want to open the door by clicking “Yes” - Garage door should open and status should change to “Opened”

Repeat the process to close the door.

Pretty cool and very useful. Now for the analytics.

- Go to the following URL: https://www.initialstate.com/app#/login

- Login using your credentials from the account we created earlier.

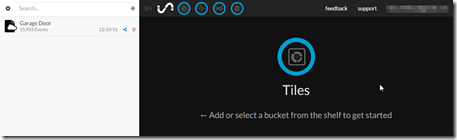

- When you login you should see something similar to the following:

Note: “Garage Door” on the left represents the bucket where all of our raw data is being streamed to. - Click on “Garage Door”

- There are number of views we can explore here.

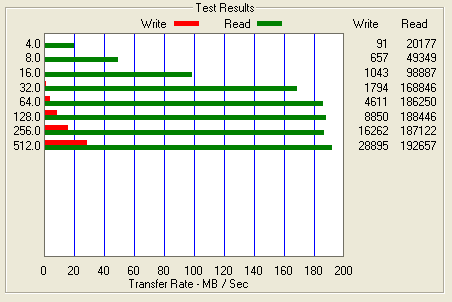

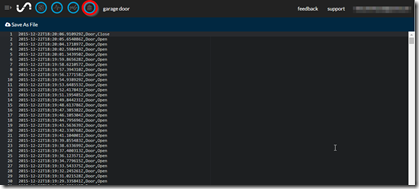

- First lest check the raw data stream:

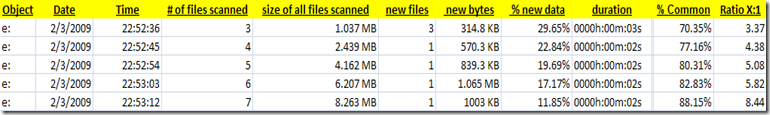

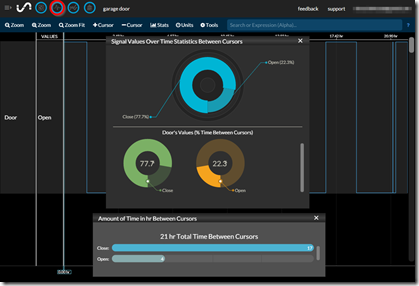

Here we see the raw data being streamed from the Raspberry Pi to our Initial State Bucket. - Next lets look at some stats from the last 24 hours.

Here I can see the state of the door my time of day, the % of the day the door was opened or closed, the number of times the doo was opened, etc…

I haven’t really started mining the data yet but I am gong to place an AMP meter on the garage door and start to use this data to determine the cost associated with use of the garage door, etc… I am thinking maybe I can do some facial recognition using the Raspberry Pi camera and OpenCV to see who is opening the door and get more precise with my analytics.

Two more item before I close out this post.

The first one being how to access the your Raspberry Pi over the internet so you can remotely check the status of your garage door and change it’s state from your mobile device. There is really nothing special about this it’s just using dynamic DNS and port forwarding.

- Find a good (possibly free but free usually comes with limitations and/or aggravation) dynamic DNS service. I use a paid noip (http://www.noip.com/) account because it integrates nicely with my router, it’s reliable and I got tired of the free version expiration every 30 days.

- This will allow you to setup a DNS name (e.g. – myhouse.ddnds.net) to reference your public IP address which assuming you have residential internet service is typically a dynamic address (meaning it can change).

- Next setup port forwarding on your internet router to forward an External Port to an Internal IP and Port

- This procedure will vary based on your router.

- Remember that WebIOPi is running on port 8000 (unless you changed it) so your forwarding rule would look something like this:

- myhouse.ddns.net:8000 >>> RPi_IP_ADDRESS:8000

- Good article on port forwarding for reference: http://goo.gl/apr8L

The last thing is a video walk-through of the system (as it exists today):

[youtube]https://youtu.be/Q5uizfiPBy4[/youtube]

I really enjoyed this project. Everything from the research, building and documentation was really fun. There were two great things for me. The first being the ability to engage my kids in something I love, they like the hands on aspect and thought it was really cool that daddy could take a bunch of parts and make something so useful. The second was actually having a device deployed which is extensible at a price point lower that what I have purchased an off-the-shelf solution for (understood that I didn’t calculate my personal time but the I would have paid to do the project).

My apologies if I left anything out, this was a long post an I am sure I missed something.

Looking forward to getting the camera implemented, it just arrived today so this will be a holiday project.