This year’s EMC Forum 2014 New York is approaching quickly. October 8th is just around the corner and I am really excited!!!

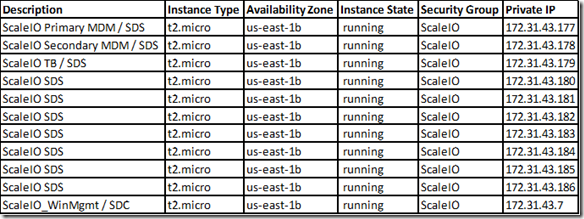

Over the years we (FusionStorm NYC) have typically prepped a demo for EMC Forum and rolled in a 20U travel rack complete with networking, servers, storage arrays, and whatever else we needed for the demo. In the past we’ve done topics like WAN Optimization, VMware SRM, VMware vCOps, and last year XtremSW. As a techie it’s always been cool to show the flashing lights, how things are cabled, etc., but this year it’s all about the cloud, commodity compute, SDW (Software Defined Whatever), and elasticity. That is why there will be no 20U travel rack this year, nothing more than a laptop and an Ethernet cable that will connect to a ScaleIO 1.3 implementation running in AWS (Amazon Web Services). The base configuration that I have built in AWS specifically for EMC Forum looks like this:

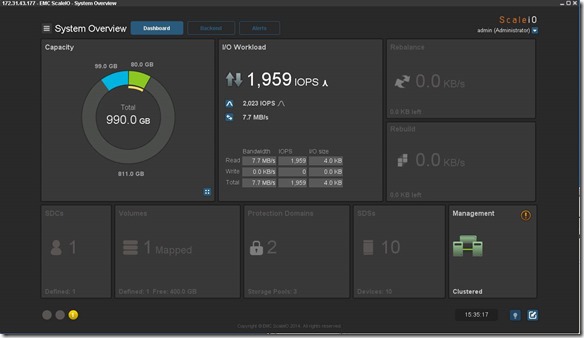

- 10 x SDS (ScaleIO Data Server) Nodes in AWS (SLES 11.3)

- Each node has 1 x 100 GB EBS SSD attached

- 1 x SDC (ScaleIO Data Client) Node in AWS (Windows 2008 R2)

- Using IOmeter and vdbench on SDC to generate workload

- Single Protection Domain: awspdomain01

- Single Pool: awspool01

- 40GB awsvol01 volume mapped to Windows SDC
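As a rough sizing check on the configuration above, here is a minimal Python sketch. It assumes two-copy mirroring and ignores spare capacity and metadata overhead, both of which would lower the real usable figure. The function and constant names are mine for illustration and are not part of any ScaleIO tooling.

```python
# Rough capacity arithmetic for the demo layout (illustrative only).
# Assumption: every block is stored twice (two-copy mirroring); spare
# capacity and metadata overhead are ignored.

SDS_NODES = 10   # SDS nodes in the demo
DEVICE_GB = 100  # one 100 GB EBS SSD per node
VOLUME_GB = 40   # size of awsvol01
COPIES = 2       # assumed mirroring factor


def raw_capacity_gb(nodes: int, device_gb: int) -> int:
    """Total raw capacity contributed by all SDS nodes."""
    return nodes * device_gb


def usable_capacity_gb(raw_gb: int, copies: int = COPIES) -> float:
    """Upper bound on usable capacity once every block is mirrored."""
    return raw_gb / copies


def raw_consumed_gb(volume_gb: int, copies: int = COPIES) -> int:
    """Raw capacity a fully written volume would occupy."""
    return volume_gb * copies


if __name__ == "__main__":
    raw = raw_capacity_gb(SDS_NODES, DEVICE_GB)
    print(f"raw pool capacity: {raw} GB")
    print(f"usable upper bound: {usable_capacity_gb(raw):.0f} GB")
    print(f"awsvol01 when full: {raw_consumed_gb(VOLUME_GB)} GB raw")
```

Under those assumptions the 40 GB demo volume is a small slice of the pool, which leaves plenty of headroom for showing elasticity.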

Terminology:

- Meta Data Manager (MDM) – Configures and monitors the ScaleIO system. The MDM can be configured in a redundant Cluster Mode with three members on three servers, or in a Single Mode on a single server.

- ScaleIO Data Server (SDS) – Manages the capacity of a single server and acts as a backend for data access. The SDS is installed on all servers that contribute storage devices to the ScaleIO system. These devices are accessed through the SDS.

- ScaleIO Data Client (SDC) – A lightweight device driver that exposes ScaleIO volumes as block devices to the application residing on the same server on which the SDC is installed.

New Features in ScaleIO v1.30:

ScaleIO v1.30 introduces several new features, listed below. In addition, it includes internal enhancements that increase the performance, capacity usage, stability, and other storage aspects.

Thin provisioning: In v1.30, you can create volumes with thin provisioning. In addition to the on-demand nature of thin provisioning, this also yields much quicker setup and startup times.

Fault Sets: You can define a Fault Set, a group of ScaleIO Data Servers (SDSs) that are likely to go down together (for example, if they are powered from the same rack), so that ScaleIO mirrors data outside of that Fault Set.
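To make the Fault Set idea concrete, here is a toy Python sketch of mirror placement that avoids the primary’s Fault Set. It is not ScaleIO’s actual placement algorithm: the Fault Set labels (`rack-a`, `rack-b`) and the `mirror_candidates` helper are hypothetical, and only the SDS names are borrowed from the demo.

```python
# Toy model of Fault Set-aware mirror placement (not ScaleIO's algorithm).
# SDS names reuse the demo's naming; the Fault Set labels are hypothetical.
from typing import Dict, List

fault_set_of: Dict[str, str] = {
    "aws177sds": "rack-a",
    "aws178sds": "rack-a",
    "aws179sds": "rack-b",
    "aws180sds": "rack-b",
}


def mirror_candidates(primary: str, fault_sets: Dict[str, str]) -> List[str]:
    """Return the SDS nodes allowed to hold the mirror copy for `primary`.

    A node qualifies only if it sits in a different Fault Set, so losing
    the primary's whole Fault Set cannot take out both copies.
    """
    primary_set = fault_sets[primary]
    return sorted(sds for sds, fs in fault_sets.items() if fs != primary_set)


if __name__ == "__main__":
    print(mirror_candidates("aws177sds", fault_set_of))
```

Note that `aws178sds` is excluded as a mirror target for `aws177sds` because both sit in the same hypothetical Fault Set.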

Enhanced RAM read cache: This feature enables read caching using the SDS server RAM.

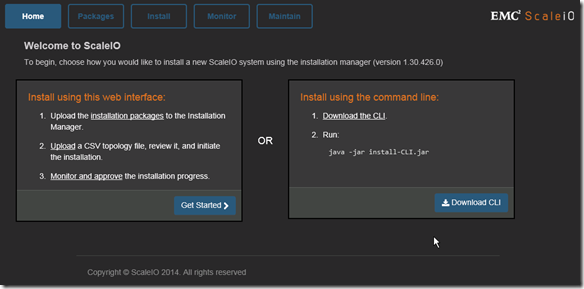

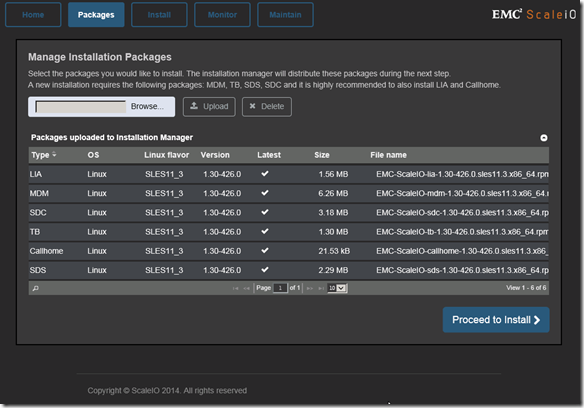

Installation, deployment, and configuration automation: Installation, deployment, and configuration have been automated and streamlined for both physical and virtual environments. The install.py installation from previous versions is no longer supported.

This is a significant change that dramatically improves installation and operational management.

VMware management enhancement: A web-based VMware plug-in communicates with the Metadata Manager (MDM) and the vSphere server to enable deployment and configuration directly from within the VMware environment.

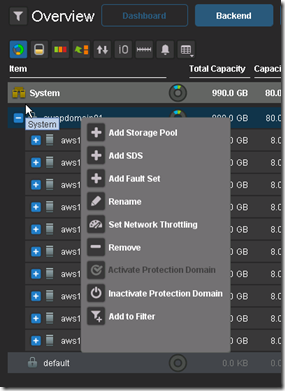

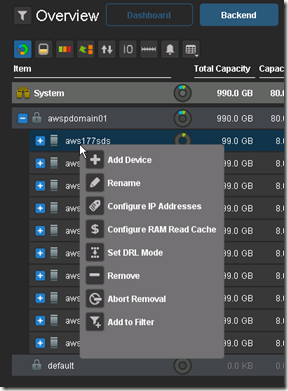

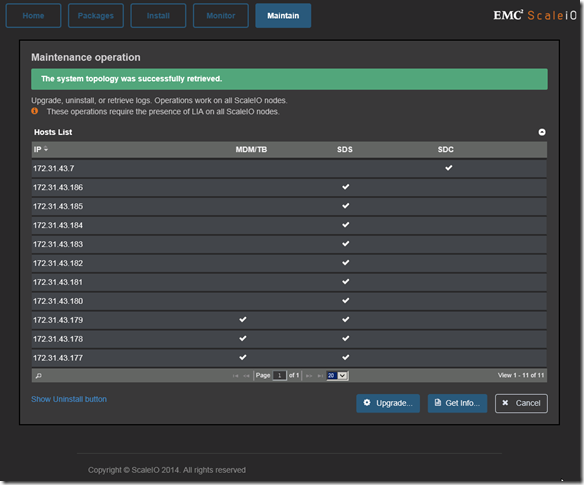

GUI enhancement: The GUI has been enhanced dramatically. In addition to monitoring, you can use the GUI to configure the backend storage elements of ScaleIO.

GUI enhancements are big!!

Active Management (huge enhancement over the v1.2 GUI):

Of course, you can continue to use the CLI:

- Add Protection Domain and Pool:

- `scli --mdm_ip 172.31.43.177 --add_protection_domain --protection_domain_name awspdomain01`

- `scli --mdm_ip 172.31.43.177 --add_storage_pool --protection_domain_name awspdomain01 --storage_pool_name awspool01`

- Add SDS Nodes:

- `scli --mdm_ip 172.31.43.178 --add_sds --sds_ip 172.31.43.177 --protection_domain_name awspdomain01 --device_path /dev/xvdf --storage_pool_name awspool01 --sds_name aws177sds`

- `scli --mdm_ip 172.31.43.178 --add_sds --sds_ip 172.31.43.178 --protection_domain_name awspdomain01 --device_path /dev/xvdf --storage_pool_name awspool01 --sds_name aws178sds`

- `scli --mdm_ip 172.31.43.178 --add_sds --sds_ip 172.31.43.179 --protection_domain_name awspdomain01 --device_path /dev/xvdf --storage_pool_name awspool01 --sds_name aws179sds`

- `scli --mdm_ip 172.31.43.178 --add_sds --sds_ip 172.31.43.180 --protection_domain_name awspdomain01 --device_path /dev/xvdf --storage_pool_name awspool01 --sds_name aws180sds`

- `scli --mdm_ip 172.31.43.178 --add_sds --sds_ip 172.31.43.181 --protection_domain_name awspdomain01 --device_path /dev/xvdf --storage_pool_name awspool01 --sds_name aws181sds`

- `scli --mdm_ip 172.31.43.178 --add_sds --sds_ip 172.31.43.182 --protection_domain_name awspdomain01 --device_path /dev/xvdf --storage_pool_name awspool01 --sds_name aws182sds`

- `scli --mdm_ip 172.31.43.178 --add_sds --sds_ip 172.31.43.183 --protection_domain_name awspdomain01 --device_path /dev/xvdf --storage_pool_name awspool01 --sds_name aws183sds`

- `scli --mdm_ip 172.31.43.178 --add_sds --sds_ip 172.31.43.184 --protection_domain_name awspdomain01 --device_path /dev/xvdf --storage_pool_name awspool01 --sds_name aws184sds`

- `scli --mdm_ip 172.31.43.178 --add_sds --sds_ip 172.31.43.185 --protection_domain_name awspdomain01 --device_path /dev/xvdf --storage_pool_name awspool01 --sds_name aws185sds`

- `scli --mdm_ip 172.31.43.178 --add_sds --sds_ip 172.31.43.186 --protection_domain_name awspdomain01 --device_path /dev/xvdf --storage_pool_name awspool01 --sds_name aws186sds`

- Add Volume:

- `scli --mdm_ip 172.31.43.178 --add_volume --protection_domain_name awspdomain01 --storage_pool_name awspool01 --size 40 --volume_name awsvol01`

- Add SDC:

- Add Windows SDC (would look different on Linux / Unix):

- `C:\Program Files\EMC\ScaleIO\sdc\bin>drv_cfg.exe --add_mdm --ip 172.31.43.177,172.31.43.178`

Calling kernel module to connect to MDM (172.31.43.177,172.31.43.178)

- `ip-172-31-43-178:~ # scli --query_all_sdc`

Query all SDC returned 1 SDC nodes.

SDC ID: dea8a08300000000 Name: N/A IP: 172.31.43.7 State: Connected GUID: 363770AA-F7A2-0845-8473-158968C20EEF

Read bandwidth: 0 IOPS 0 Bytes per-second

Write bandwidth: 0 IOPS 0 Bytes per-second

- Map Volume to SDC:

- `scli --mdm_ip 172.31.43.178 --map_volume_to_sdc --volume_name awsvol01 --sdc_ip 172.31.43.7`
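The ten add_sds commands in the list above differ only in the last octet of the SDS IP address and the matching SDS name, so they are easy to generate. The Python sketch below only builds and prints the command strings, written with double-hyphen flags; it does not invoke `scli`. The helper names are my own, and the IP range, device path, and naming pattern are taken from the demo.

```python
# Generate the ten add_sds commands for the demo nodes.
# This only builds and prints command strings; it does not call scli.
from typing import List

MDM_IP = "172.31.43.178"
PROTECTION_DOMAIN = "awspdomain01"
STORAGE_POOL = "awspool01"
DEVICE_PATH = "/dev/xvdf"
SUBNET_PREFIX = "172.31.43."
FIRST_HOST, LAST_HOST = 177, 186  # last octets of the SDS node IPs


def add_sds_command(last_octet: int) -> str:
    """Build one add_sds command for the SDS whose IP ends in last_octet."""
    return (
        f"scli --mdm_ip {MDM_IP} --add_sds "
        f"--sds_ip {SUBNET_PREFIX}{last_octet} "
        f"--protection_domain_name {PROTECTION_DOMAIN} "
        f"--device_path {DEVICE_PATH} "
        f"--storage_pool_name {STORAGE_POOL} "
        f"--sds_name aws{last_octet}sds"
    )


def all_add_sds_commands() -> List[str]:
    """Return the commands for every SDS node, in IP order."""
    return [add_sds_command(o) for o in range(FIRST_HOST, LAST_HOST + 1)]


if __name__ == "__main__":
    for command in all_add_sds_commands():
        print(command)
```

Review the printed commands before pasting them into a shell on the MDM, and adjust the constants if your subnet or device path differs.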

REST API: A Representational State Transfer (REST) API can be used to expose monitoring and provisioning via the REST interface.

OpenStack support: ScaleIO includes a Cinder driver that interfaces with OpenStack, and presents volumes to OpenStack as block devices which are available for block storage. It also includes an OpenStack Nova driver, for handling compute and instance volume-related operations.

Planned shutdown of a Protection Domain: You can simply and effectively shut down an entire Protection Domain, thus preventing an unnecessary rebuild/rebalance operation.

Operationally, planned shutdown of a Protection Domain is a big enhancement!!

Role-based access control: A role-based access control mechanism has been introduced.

IP roles: For each IP address associated with an SDS, you can define the communication role that the IP address will have: Internal (between SDSs and MDMs), External (between ScaleIO Data Clients (SDCs) and SDSs), or both. This allows you to define virtual subnets.

MDM IP address configuration: You can assign up to eight IP addresses to primary, secondary, and tie-breaker MDM servers, thus enhancing MDM communication redundancy. In addition, you can configure a specific IP address that the MDM will use to communicate with the management clients. This enables you to configure a separate management network so you can run the GUI on an external system.

Note: The above new features were taken from the Global Services Product Support Bulletin ScaleIO Software 1.30 (access to this document likely requires a support.emc.com login). I have played with most of the new features but not all of them. IMO v1.3 provides a major leap forward in usability.

While big iron (traditional storage arrays) probably isn’t going away very soon, the cool factor just doesn’t come close to SDW (Software Defined Whatever), so stop by the FusionStorm booth at EMC Forum and let’s really dig into some very cool stuff.

If you are really interested in digging into ScaleIO one-on-one, please email me (rbocchinfuso@fusionstorm.com) or tweet me @rbocchinfuso and we can set up a time where we can focus, maybe a little more than will be possible at EMC Forum.

Looking forward to seeing you at the FusionStorm booth at EMC Forum 2014 New York on October 8th. If you haven’t registered for EMC Forum, you should register now; Forum is only 12 days away.

![clip_image002[5] clip_image002[5]](http://gotitsolutions.org//wp-content/uploads/2014/09/clip_image0025_thumb.jpg)