One of the most debated topics related to VMware and I/O performance seems to be the mystery surrounding the relative performance characteristics of VMFS volumes and RDM (Raw Device Mapping) volumes.

Admittedly, it is difficult to argue with the flexibility and operational benefits of VMFS volumes, but I wanted to measure the characteristics of each approach and provide some documentation that could be leveraged when deciding between VMFS and RDM. By no means are these tests concluded, but I thought that as I gathered the data I would blog it, so it could be used before I complete the whitepaper that all of these tests will be part of.

Benchmark configuration:

The benchmarks contained in this document were performed in a lab environment with the following configuration:

- Physical server: Dell 2850, dual CPU, 4 GB RAM

- Windows 2003 SP2 virtual machine

  - Single 2.99 GHz CPU

  - 256 MB RAM (configured this low to remove the effects of kernel file system caching)

- Disk array

  - EMC CLARiiON CX500

  - Dedicated RAID 1 device

  - 2 LUNs created on the RAID 1 storage group

- Two dedicated 10 GB file systems

  - c:\benchmark\vmfs

    - 10 GB .vmdk created on VMFS and formatted with NTFS

  - c:\benchmark\rdm

    - 10 GB RDM volume mapped to the VM and formatted with NTFS

Benchmark tools:

Benchmark tests thus far were run using two popular disk and file system benchmarking tools.

- IOzone (http://www.iozone.org/)

  - Run as: iozone -Rab output.wks

- HDtune (http://www.hdtune.com/)
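For anyone wanting to repeat the IOzone run against both file systems, the command above can be wrapped in a small script. This is only a sketch: the function names are hypothetical, and it assumes that IOzone creates its temporary test file in the current working directory, so the target file system is selected by changing directory before the run. The flags are the ones used above: `-R` produces an Excel-compatible report, `-a` runs the automatic sweep of file and record sizes, and `-b` names the spreadsheet output file.

```python
import shutil
import subprocess


def build_iozone_command(report_file="output.wks"):
    """Return the argv list equivalent to `iozone -Rab <report_file>`."""
    return [
        "iozone",
        "-R",               # generate an Excel-compatible report
        "-a",               # automatic mode: sweep file sizes and record sizes
        "-b", report_file,  # write the spreadsheet-format results to this file
    ]


def run_iozone(target_dir, report_file="output.wks"):
    """Run IOzone with target_dir as the working directory.

    Assumption: IOzone places its temporary test file in the working
    directory, so target_dir decides which file system is exercised.
    """
    executable = shutil.which("iozone")
    if executable is None:
        raise FileNotFoundError("iozone not found on PATH")
    command = build_iozone_command(report_file)
    command[0] = executable
    return subprocess.run(command, cwd=target_dir, check=True)
```

With this in place, something like `run_iozone(r"c:\benchmark\vmfs", "vmfs.wks")` followed by `run_iozone(r"c:\benchmark\rdm", "rdm.wks")` would keep the two result sets in separate files.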

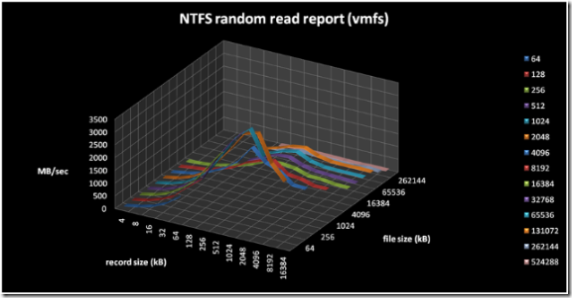

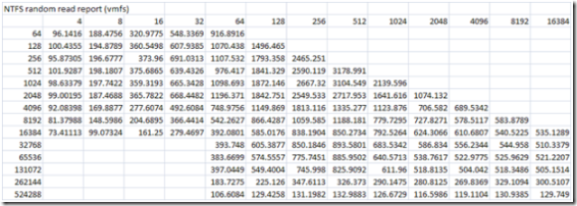

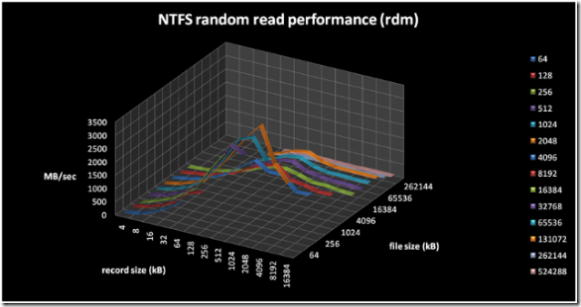

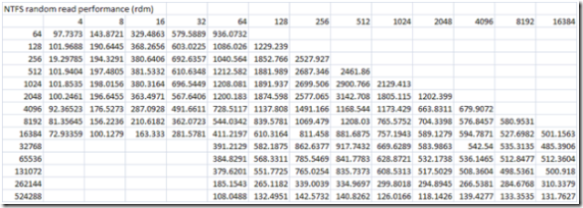

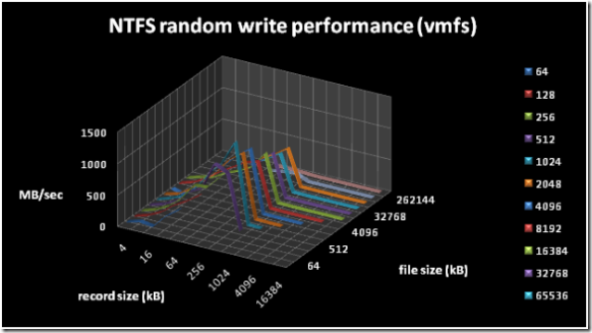

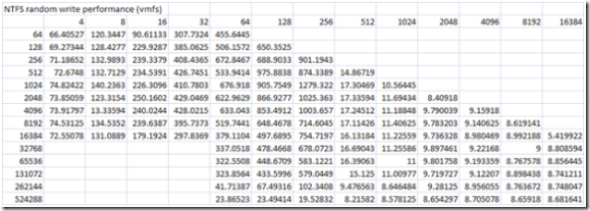

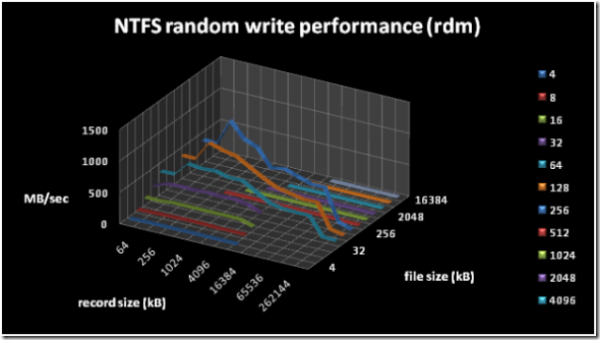

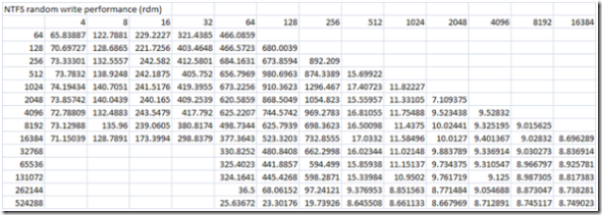

IOzone Benchmarks:

HDtune Benchmarks:

HD Tune: VMware Virtual disk Benchmark (the .vmdk on VMFS)

Transfer Rate Minimum : 54.1 MB/sec

Transfer Rate Maximum : 543.7 MB/sec

Transfer Rate Average : 476.4 MB/sec

Access Time : 0.4 ms

Burst Rate : 83.3 MB/sec

CPU Usage : 36.9%

HD Tune: DGC RAID 1 Benchmark (the RDM volume)

Transfer Rate Minimum : 57.1 MB/sec

Transfer Rate Maximum : 65.3 MB/sec

Transfer Rate Average : 62.4 MB/sec

Access Time : 5.4 ms

Burst Rate : 83.9 MB/sec

CPU Usage : 13.8%

One thing that is very obvious is that VMFS makes extensive use of system/kernel cache. This shows most clearly in the HDtune benchmarks, where the virtual disk averages 476.4 MB/sec with a 0.4 ms access time against 62.4 MB/sec and 5.4 ms for the RAID 1 device. The increased CPU utilization (36.9% versus 13.8%) is a bit of a concern and is most likely due to the caching overhead. I am going to test small block random writes while monitoring CPU overhead; my gut tells me that small block random writes to a VMFS volume will tax the CPU. More to come...
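The gap between the two HD Tune runs is easier to see as ratios. The snippet below is a minimal sketch that uses only the figures reported above; the dictionary keys and the `ratio` helper are names made up for illustration, and labeling the "VMware Virtual disk" run as `vmfs` and the "DGC RAID 1" run as `rdm` is an assumption based on the test setup.

```python
# HD Tune figures copied from the two runs above.
# Assumption: "VMware Virtual disk" is the VMFS-backed .vmdk and
# "DGC RAID 1" is the RDM volume.
results = {
    "vmfs": {"min_mb_s": 54.1, "max_mb_s": 543.7, "avg_mb_s": 476.4,
             "access_ms": 0.4, "burst_mb_s": 83.3, "cpu_pct": 36.9},
    "rdm": {"min_mb_s": 57.1, "max_mb_s": 65.3, "avg_mb_s": 62.4,
            "access_ms": 5.4, "burst_mb_s": 83.9, "cpu_pct": 13.8},
}


def ratio(metric):
    """Return the VMFS value divided by the RDM value for one metric."""
    return results["vmfs"][metric] / results["rdm"][metric]


if __name__ == "__main__":
    for metric in results["vmfs"]:
        print(f"{metric:>10}: VMFS/RDM = {ratio(metric):.2f}")
```

The average transfer rate comes out at roughly 7.6 times the RDM figure and CPU usage at roughly 2.7 times, while the minimum and burst rates are nearly identical, which is the pattern the caching argument rests on.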

It turns out that VMFS does not do any caching for virtual disks. You can confirm this yourself by monitoring system memory usage in esxtop or /proc/vmware/mem while you run your tests. It follows that the speedup observed in the VMFS case must be due to something else.

A couple of comments:

1. If what you are stating is true, the VMFS performance should be no different from the RDM performance.

2. How do you explain this direct quote from the vi_key_features_benefits.pdf document: “vmfs uses volume, device, object and buffer caching to improve performance”? http://www.virtualizeasap.com/about/resources/vi_key_features_benefits.pdf

There is no doubt in my mind that VMFS is caching I/O.